In natural language processing, the pursuit of more efficient and precise text generation methods is constant. Large language models, or LLMs, have dazzled us with their prowess in text generation and language translation. However, a pressing question arises: can we trust them to always provide accurate and informative responses?

Meet retrieval-augmented generation (RAG), a game-changing technique that tackles this challenge head-on by seamlessly integrating real-world knowledge with LLM capabilities!

In this article, we cover the impact of retrieval-augmented generation (RAG) on large language models (LLMs). We examine how it enhances comprehension, improves response coherence, and ultimately fosters more reliable and impactful applications across various professional and educational settings.

Understanding the RAG Framework and Redefining Text Generation

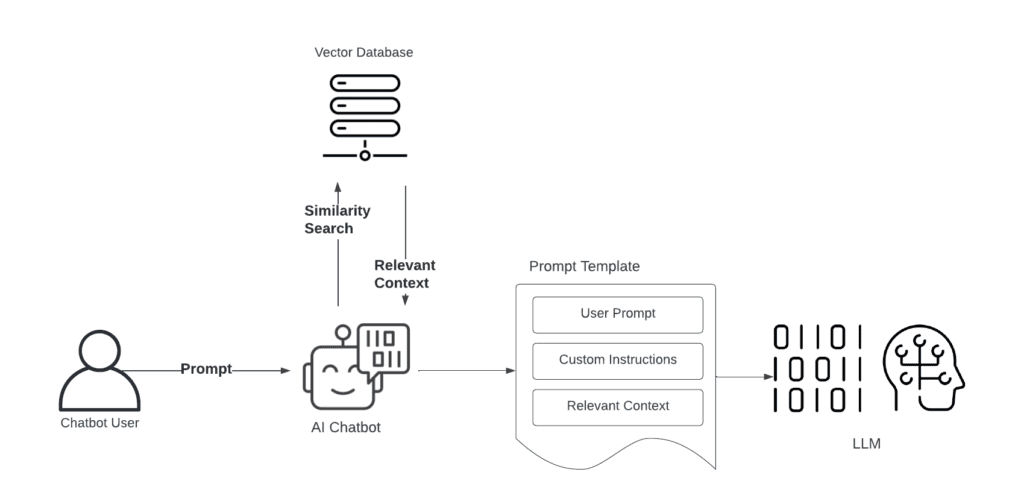

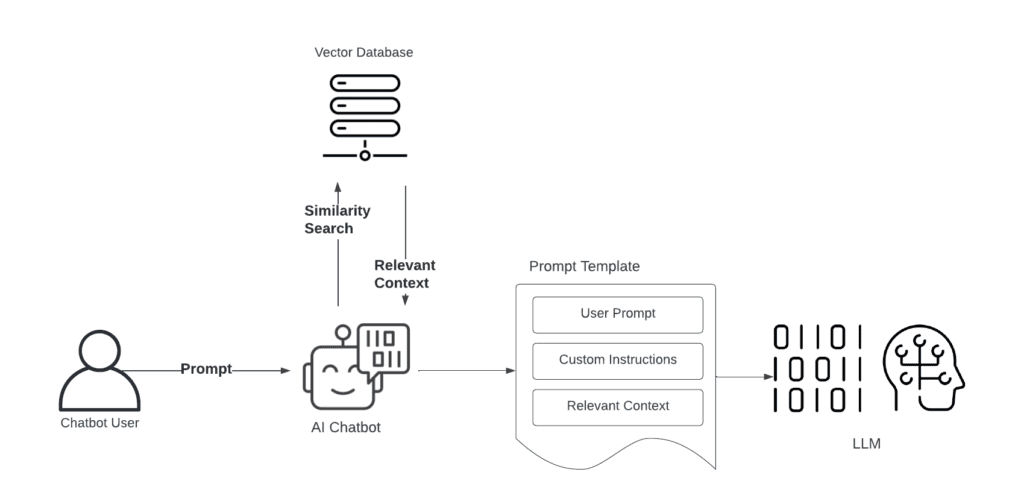

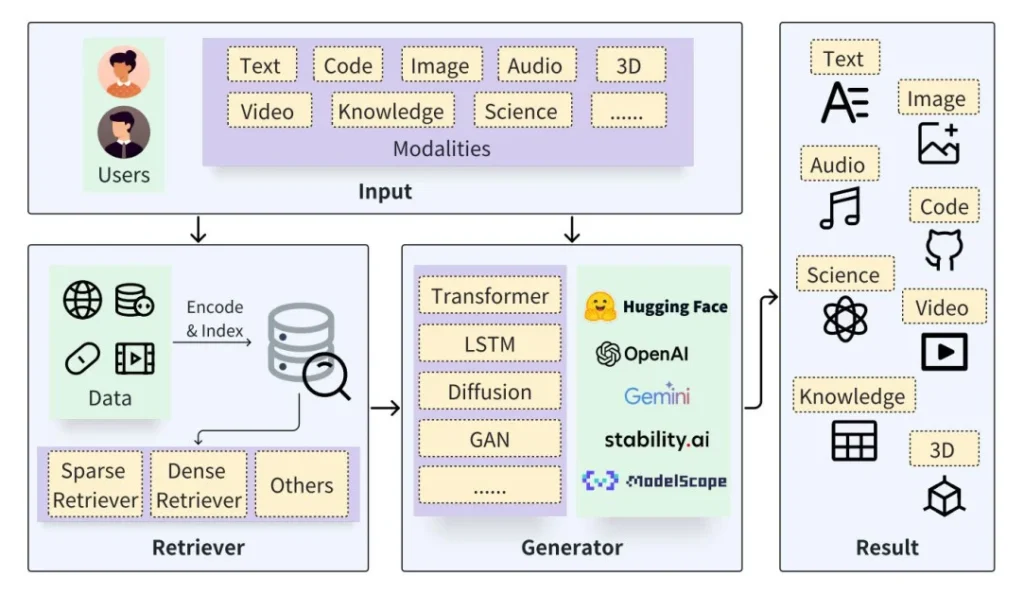

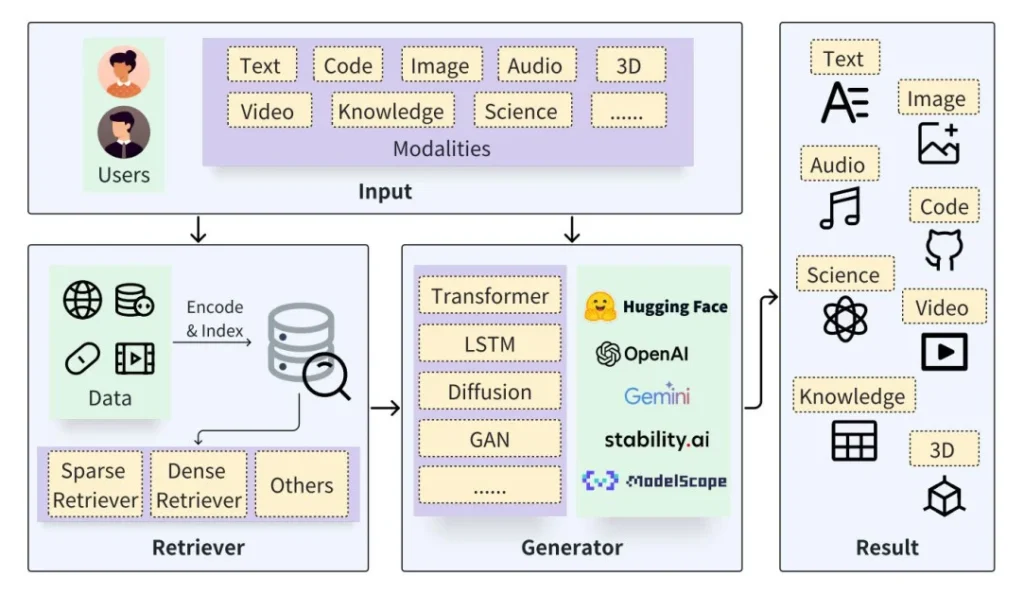

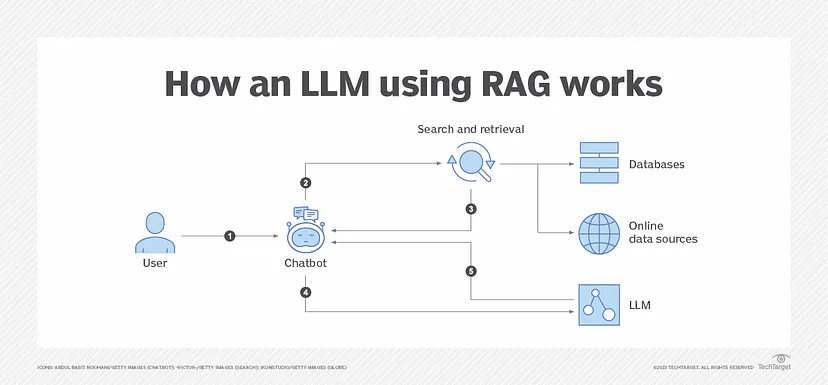

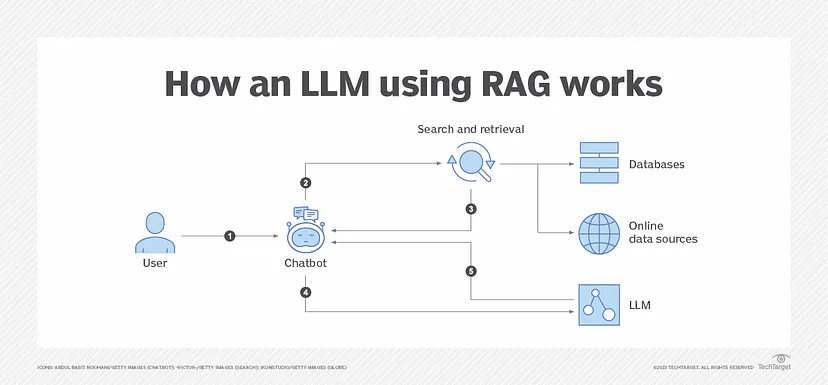

Retrieval-augmented generation (RAG) changes text generation by combining a retrieval mechanism with a language model. Unlike models that rely only on what they learned during training, a RAG system retrieves relevant passages from external knowledge sources at query time and supplies them to the model as context, which improves contextual relevance and precision. This combination lets large language models (LLMs) draw insights from diverse sources. As a result, RAG-based systems are not limited to their training data and can produce more nuanced, better-grounded content.
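To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular RAG product works: the sample documents, the function names (`retrieve`, `build_prompt`), and the prompt wording are all hypothetical, and simple word-count cosine similarity stands in for the retriever.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question (hypothetical wording)."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base, made up for this example.
DOCUMENTS = [
    "The X100 router supports WPA3 and ships with a two-year warranty.",
    "Our offices are closed on public holidays.",
    "To reset the X100 router, hold the reset button for ten seconds.",
]

query = "How do I reset the X100 router?"
passages = retrieve(query, DOCUMENTS, k=2)
prompt = build_prompt(query, passages)
print(prompt)
```

In a fuller system, the prompt would then be sent to an LLM, and the word-count similarity would typically give way to a stronger retriever such as embedding-based search.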

The impact of retrieval-augmented generation (RAG) transcends theory, finding practical applications across various domains. Take customer service chatbots, for instance, which have long been plagued by generic responses. With RAG, these chatbots gain access to a wealth of information, including product manuals, FAQs, and past customer interactions, which enables them to provide personalized and accurate solutions.

In education, RAG-enhanced tutoring systems provide contextual explanations by referencing online resources or textbooks. Moreover, RAG promotes trust and transparency by disclosing sources, akin to a research assistant. This transparency is crucial in sectors like healthcare or finance, where reliable information is essential for critical decisions.
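One way to picture source disclosure is to keep a source label attached to every retrieved passage and show those labels next to the answer. The sketch below assumes a hypothetical `Passage` record and made-up sample passages; the prompt wording and the `[1]`-style citation markers are illustrative choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical label, e.g. a document title or section
    text: str


def build_cited_prompt(question, passages):
    """Number each passage so the model can refer to it as [1], [2], ..."""
    numbered = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using the numbered passages and cite them like [1].\n\n"
        f"{numbered}\n\nQuestion: {question}\nAnswer:"
    )


def format_sources(passages):
    """Render the source list that is shown to the user alongside the answer."""
    return "\n".join(f"[{i}] {p.source}" for i, p in enumerate(passages, start=1))


# Made-up passages for illustration only.
passages = [
    Passage("Product manual, section 4", "Hold the reset button for ten seconds."),
    Passage("Support FAQ", "A reset restores factory settings."),
]
prompt = build_cited_prompt("How do I reset the device?", passages)
sources = format_sources(passages)
print(sources)
```

Because the numbering in the prompt and in the source list comes from the same ordered list, a citation such as [2] in the answer can be traced back to the document it came from.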

Raising the Bar: Empowering Language Models with the Role of RAG

The field of natural language processing (NLP) is rapidly transforming, driven by the growing demand for nuanced, contextually rich language models.

The race for true language mastery takes a leap forward with retrieval-augmented generation. RAG gives AI systems access to a large body of external knowledge, so they can respond with greater depth and accuracy. This approach promises not only to raise the bar for LLMs, but also to help them overcome the limits of their training data and generate responses that demonstrate deeper understanding.

For instance, RAG-powered chatbots could provide more informative and helpful customer service. They can leverage retrieved product information to tailor responses to specific inquiries.

1. Enhanced Contextual Understanding

The integration of retrieval-augmented generation (RAG) into large language models (LLMs) offers a great advantage: a heightened contextual understanding of input prompts. By tapping into external sources, RAG-enhanced LLMs can contextualize generated text more effectively, resulting in outputs that are not only coherent but also remarkably informative and accurate. This contextual enrichment proves invaluable in tasks like question answering, where precise comprehension of queries is crucial. RAG elevates LLMs by infusing them with real-world knowledge, facilitating the delivery of contextually relevant responses. Equipped with RAG, LLMs gain access to a vast wealth of information, thus enabling them to grasp nuances with precision, ultimately leading to more insightful outputs.

2. Improved Response Coherence

Through the integration of retrieved knowledge, RAG enhances the coherence of responses generated by LLMs. By selecting and incorporating relevant information from external sources, RAG ensures that generated outputs maintain logical flow and consistency with the provided context. This approach mitigates the risk of generating contradictory or nonsensical responses, resulting in more coherent and logically sound outputs that align closely with user expectations.

3. Augmented Factual Accuracy

RAG significantly bolsters the factual accuracy of responses produced by LLMs. By leveraging external knowledge sources during the generation process, RAG-equipped models can ground and supplement their outputs with information from those sources. This grounding helps mitigate the propagation of misinformation or inaccuracies, making generated responses not only coherent but also more factually reliable, provided the sources themselves are trustworthy. As a result, RAG contributes to the overall trustworthiness and credibility of LLM-generated content in various applications, including educational and professional domains.

RAG Challenges: From Scalability to Bias Mitigation in AI Deployment

Retrieval-augmented generation (RAG) is a powerful technique that combines both retrieval and generation capabilities in large language models (LLMs) to enhance their performance. However, like any approach, RAG also faces several challenges and obstacles:

- Scalability and Efficiency: Retrieving relevant information from large corpora poses computational challenges, necessitating efficient mechanisms to ensure real-time performance and scalability, especially with massive datasets.

- Balancing Relevance and Diversity: RAG models must delicately balance generating responses relevant to queries while ensuring diversity to avoid redundancy. Achieving this balance demands meticulous tuning of parameters and retrieval strategies.

- Noise Filtering: Large corpora often contain noisy or irrelevant documents that can disrupt the retrieval process. RAG models require robust mechanisms to filter out such noise, prioritizing the retrieval of pertinent information.

- Fairness and Bias Mitigation: Like other LLM-based systems, RAG models are susceptible to biases inherent in training data, and the retrieved documents can carry biases of their own. Ensuring fairness and mitigating biases in both retrieval and generation processes are crucial for responsible AI deployment.
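The relevance-versus-diversity trade-off in the list above can be sketched with maximal marginal relevance (MMR), a well-known re-ranking heuristic. The article does not name a specific method, so MMR is swapped in here purely as an illustration. The `mmr_select` function, the `lam` weight, the sample candidates, and the use of Jaccard word overlap as the similarity measure are all assumptions made for this example.

```python
import re


def tokens(text):
    """Set of lowercase alphanumeric word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard overlap between two token sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def mmr_select(query, candidates, k=2, lam=0.5):
    """Greedy MMR: lam * relevance - (1 - lam) * max similarity to already selected."""
    q = tokens(query)
    cand_tokens = [tokens(c) for c in candidates]
    remaining = list(range(len(candidates)))
    selected = []
    while remaining and len(selected) < k:
        def score(i):
            relevance = jaccard(q, cand_tokens[i])
            redundancy = max(
                (jaccard(cand_tokens[i], cand_tokens[j]) for j in selected),
                default=0.0,
            )
            return lam * relevance - (1 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return [candidates[i] for i in selected]


# Made-up candidates: the first two are near-duplicates.
candidates = [
    "Reset the router by holding the reset button.",
    "Reset the router by holding the reset button firmly.",
    "After a router reset, factory settings are restored automatically.",
]
query = "reset router"

diverse = mmr_select(query, candidates, k=2, lam=0.5)
relevance_only = mmr_select(query, candidates, k=2, lam=1.0)
print(diverse)
print(relevance_only)
```

With `lam=1.0` the selection is driven by relevance alone and returns the two near-duplicates; with `lam=0.5` the second near-duplicate is penalized for redundancy and the third passage is chosen instead. Tuning `lam` is one example of the parameter tuning mentioned above.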

In tackling these challenges, RAG benefits from innovative algorithms and distributed computing methods, which enable swift access to pertinent data within extensive corpora. Moreover, integrating advanced machine learning-based filtering mechanisms enhances noise reduction, refines data precision, and strengthens the effectiveness of RAG systems.
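As a much simpler stand-in for the learned filtering mechanisms mentioned above, the sketch below drops retrieved passages whose relevance score falls under a threshold. It assumes a retriever has already assigned each passage a score between 0 and 1; the `filter_passages` name, the `min_score` and `max_passages` defaults, and the sample scores are hypothetical.

```python
def filter_passages(scored_passages, min_score=0.3, max_passages=3):
    """Keep passages whose score meets the threshold, best first."""
    kept = [(text, score) for text, score in scored_passages if score >= min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in kept[:max_passages]]


# Made-up (passage, score) pairs, as if returned by a retriever.
scored = [
    ("Hold the reset button for ten seconds.", 0.82),
    ("Our offices are closed on public holidays.", 0.05),
    ("A reset restores factory settings.", 0.47),
]
clean = filter_passages(scored)
print(clean)
```

A fixed threshold is a blunt tool: set too high, it discards useful context, and set too low, it lets noise through, so in practice the cutoff would need to be tuned on real queries.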

The Power of Retrieval-Augmented Generation and a Brighter Future for RAG-powered LLMs

Despite challenges in scalability, efficiency, and bias mitigation, ongoing research propels the evolution of RAG. As RAG’s capabilities are refined, we can envision a future in which RAG-powered LLMs deliver contextually rich responses that prioritize fairness and accuracy, expanding the possibilities in natural language processing and beyond.

As stated in “Decoding RAG: Exploring its Significance in Generative AI”: “In summary, retrieval-augmented generation synergizes the strengths of retrieval-based and generative models to elevate the precision and relevance of the generated text. By harnessing the accuracy of retrieval-based models for information retrieval and the creative capabilities of generative models, this approach fosters more resilient and contextually grounded language generation systems.”

Check out one of our recent AIAW Podcast episodes featuring Jesper Fredriksson, delving into RAG models and autonomous AI agents. Jesper explores the potential of these advanced AI systems to transform industries. A must-listen for anyone interested in the cutting edge of AI technology!

