As Retrieval-Augmented Generation (RAG) models continue to advance, their impact is spreading across the NLP community. These models sit at the intersection of natural language processing (NLP) and information retrieval, and they have the potential to change how we interact with technology and with each other.

RAG is an AI framework that supplies relevant data as context to generative AI (GenAI) models. By grounding generation in retrieved, relevant context, it produces richer, more context-aware outputs and improves the quality and accuracy of GenAI and LLM output. But how does it achieve this?

In this article, we cover the core of the retrieval-augmented generation (RAG) approach. We also highlight its real-world applications and the role they play in advancing language models and society at large.

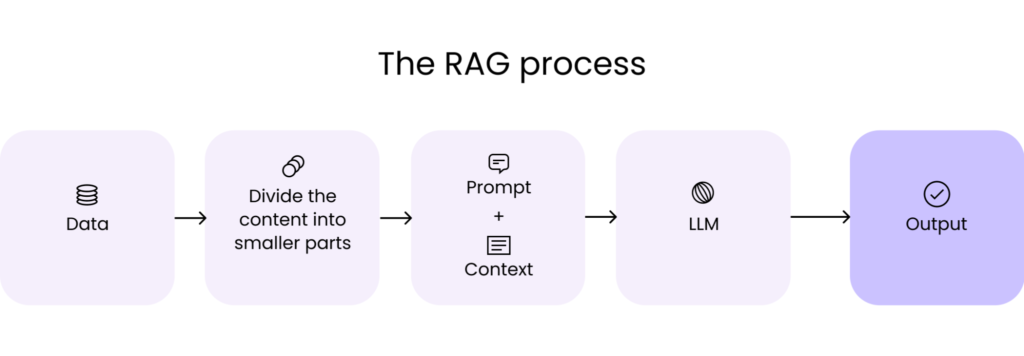

The Process of Retrieval-Augmented Generation: What is RAG?

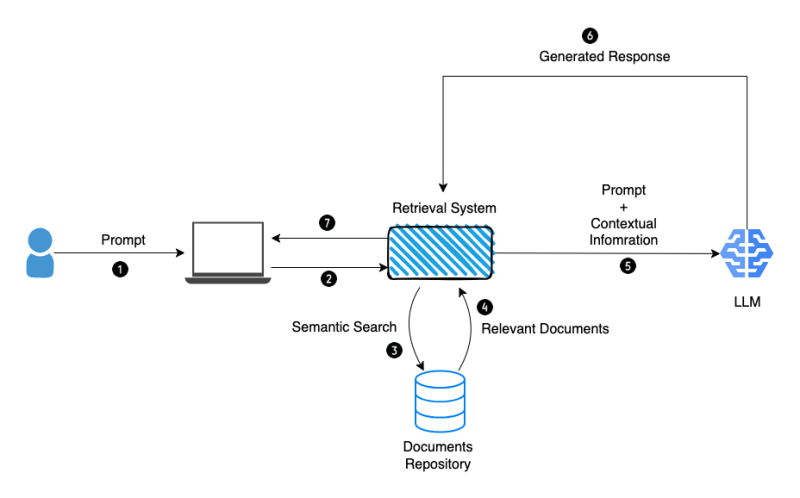

Retrieval-Augmented Generation (RAG) is the process of optimizing the output of a large language model (LLM) by combining the model’s generative strengths with contextual information retrieved from external sources. This combination lets responses go beyond the limits of conventional text generation and marks a shift in natural language processing.

RAG retrieves supporting documents from a source such as Wikipedia, concatenates them with the input prompt, and feeds the result to the text generator. Unlike a static LLM, a RAG system can draw on up-to-date information without retraining, which makes its outputs more reliable. By integrating knowledge sources like encyclopedias and databases, RAG improves content accuracy and reliability in a cost-effective manner and helps mitigate language model hallucination.
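The retrieve, concatenate, and generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes a tiny in-memory document list, uses bag-of-words cosine similarity in place of a learned retriever, and stops at building the prompt that would be sent to a text generator. The names `retrieve`, `build_prompt`, and `DOCUMENTS` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents ranked by similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Concatenate the retrieved passages with the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Assumed toy knowledge source; a real system would query an index or database.
DOCUMENTS = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Mount Everest is the highest mountain above sea level.",
    "The Louvre in Paris houses the Mona Lisa.",
]

query = "When was the Eiffel Tower completed?"
passages = retrieve(query, DOCUMENTS, k=1)
prompt = build_prompt(query, passages)
print(prompt)  # this augmented prompt is what the LLM would receive
```

In a real deployment the similarity function would typically be replaced by dense embeddings and a vector index, but the shape of the pipeline stays the same.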

RAG enables the LLM to access up-to-date, brand-specific information so that it can generate high-quality responses. In a research paper, human raters found RAG-based responses to be nearly 43% more accurate than answers created by an LLM that relied on fine-tuning.

The Significance of RAG Models

RAG’s impact on NLP is profound. It has changed how AI systems interact with, understand, and generate human language. RAG has also been crucial in making language models more versatile, with use cases ranging from sophisticated chatbots to complex content creation tools. Retrieval-augmented generation bridges the gap between the static knowledge of traditional models and the ever-changing nature of human language. Some of the key characteristics of RAG are:

- RAG merges conventional language models with a retrieval system. This hybrid framework lets it generate responses by combining learned patterns with relevant information retrieved from external databases or the internet in real time.

- RAG can dynamically tap into numerous external data sources. This lets it fetch the latest and most relevant information, improving the accuracy of its responses.

- RAG integrates deep learning methodologies with natural language processing. This combination supports a deeper grasp of language subtleties, context, and semantics.
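The second point, picking up new information without retraining, can be illustrated with a toy example. The sketch below assumes a hypothetical in-memory `KnowledgeStore` that ranks documents by Jaccard word overlap. Adding a document changes what is retrieved for the same question, and no model weights are involved at any point.

```python
import re


class KnowledgeStore:
    """A toy in-memory document store (hypothetical, for illustration only)."""

    def __init__(self):
        self._docs = []  # list of (text, token set) pairs

    @staticmethod
    def _tokens(text):
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def add(self, text):
        """Index a new document; nothing is retrained."""
        self._docs.append((text, self._tokens(text)))

    def search(self, query):
        """Return the document with the highest Jaccard overlap, or None."""
        q = self._tokens(query)
        best_text, best_score = None, 0.0
        for text, tokens in self._docs:
            union = q | tokens
            score = len(q & tokens) / len(union) if union else 0.0
            if score > best_score:
                best_text, best_score = text, score
        return best_text


store = KnowledgeStore()
question = "Does the product support PDF export?"

store.add("Version 1.0 of the product supports CSV export.")
before = store.search(question)  # only the older document is available

store.add("Version 2.0 of the product adds PDF export.")
after = store.search(question)   # the newer document now ranks first

print(before)
print(after)
```

The same question yields different supporting context before and after the update, which is the mechanism that lets a RAG system stay current while the underlying language model remains unchanged.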

According to a survey, large language models (LLMs) demonstrate significant capabilities but face challenges such as hallucination, outdated knowledge, and non-transparent, untraceable reasoning processes. RAG has emerged as a promising solution by incorporating knowledge from external databases. This enhances the accuracy and credibility of the models and allows for knowledge updates and integration of domain-specific information.

Seven Real-World Applications of Retrieval-Augmented Generation (RAG) Models

Retrieval-augmented generation models have demonstrated versatility across multiple domains. Some of the real-world applications of RAG models are:

1. Advanced question-answering systems

RAG models can power question-answering systems that retrieve relevant information and generate accurate responses, enhancing information accessibility for individuals and organizations. For example, a healthcare organization can use RAG models to build a system that answers medical queries by retrieving information from medical literature and generating precise responses.

2. Content creation and summarization

RAG models streamline content creation by retrieving relevant information from diverse sources, which supports the development of high-quality articles, reports, and summaries. They also generate coherent text from specific prompts or topics, and they are valuable in summarization tasks, where they extract relevant information from sources to produce concise summaries. For example, a news agency can use RAG models to generate news articles automatically or to summarize lengthy reports, which shows their value to content creators and researchers.

3. Conversational agents and chatbots

RAG models enhance conversational agents by allowing them to fetch contextually relevant information from external sources. This capability helps customer service chatbots, virtual assistants, and other conversational interfaces deliver accurate and informative responses during interactions. Ultimately, it makes these AI systems more effective in assisting users.

4. Information retrieval

RAG models enhance information retrieval systems by improving the relevance and accuracy of search results. By combining retrieval-based methods with generative capabilities, they enable search engines both to retrieve documents or web pages that match a user query and to generate informative snippets that represent the content of each result.
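As a rough sketch of search results paired with snippets, the example below ranks pages by word overlap with the query and attaches the best-matching sentence to each hit. Note the substitution: the snippet here is extractive (a sentence copied from the page), standing in for the generated snippet a RAG system would produce. The `PAGES` data and function names are assumptions made for illustration.

```python
import re


def tokens(text):
    """Return the set of lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_snippet(query, document):
    """Return the sentence that shares the most words with the query."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    q = tokens(query)
    return max(sentences, key=lambda s: len(q & tokens(s)))


def search(query, pages):
    """Rank pages by word overlap and attach a snippet to each hit."""
    q = tokens(query)
    hits = []
    for title, body in pages.items():
        score = len(q & tokens(body))
        if score > 0:  # skip pages with no overlap at all
            hits.append((score, title, best_snippet(query, body)))
    hits.sort(key=lambda h: h[0], reverse=True)
    return [(title, snippet) for _, title, snippet in hits]


# Assumed toy corpus standing in for a web index.
PAGES = {
    "Solar panels": "Solar panels convert sunlight into electricity. "
                    "They are often installed on rooftops.",
    "Wind turbines": "Wind turbines convert moving air into electricity. "
                     "Offshore farms are growing quickly.",
    "Bread baking": "Sourdough needs a starter. Bake it in a hot oven.",
}

results = search("How do solar panels make electricity?", PAGES)
for title, snippet in results:
    print(f"{title}: {snippet}")
```

Replacing `best_snippet` with a call to a language model that summarizes the retrieved page would turn this into the retrieval-plus-generation pattern the paragraph describes.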

5. Educational tools and resources

RAG models, embedded in educational tools, revolutionize learning with personalized experiences. They adeptly retrieve and generate tailored explanations, questions, and study materials, elevating the educational journey by catering to individual needs.

6. Legal research and analysis

RAG models streamline legal research processes by retrieving relevant legal information and aiding legal professionals in drafting documents, analyzing cases, and formulating arguments with greater efficiency and accuracy.

7. Content recommendation systems

RAG models power advanced content recommendation systems across digital platforms. By understanding user preferences, leveraging retrieval capabilities, and generating personalized recommendations, they enhance user experience and content engagement.

The Impact of Retrieval-Augmented Generation (RAG) on Society

Retrieval-augmented generation (RAG) models are poised to become a transformative force in society, paving the way for applications that unlock our collective potential. These tools go beyond traditional large language models by accessing and integrating external knowledge, enabling them to revolutionize the way we communicate and solve problems. Here’s how RAG models promise to shape the future:

- Enhanced communication and understanding: Imagine language barriers dissolving as RAG models translate seamlessly, incorporating cultural nuances and real-time updates. Educational materials can be personalized to individual learning styles, and complex scientific discoveries can be communicated effectively to the public.

- Improved decision-making: Stuck on a difficult problem? RAG can help brainstorm solutions, drawing on vast external knowledge bases to suggest innovative approaches and identify relevant experts. This helps individuals and organizations tackle complex challenges efficiently and effectively.

- Personalized experiences: From healthcare to education, RAG models can tailor information and recommendations to individual needs and preferences. Imagine AI assistants suggesting the perfect medication based on your medical history or crafting a personalized learning plan that accelerates your understanding.

Navigating the Future of RAG Models

Looking ahead, RAG models have the potential to reshape how we interact, learn, and create. While their applications offer exciting possibilities, addressing ethical considerations and overcoming challenges will be crucial to realizing that potential responsibly.

A guide to retrieval-augmented generation language models states: “Language models have shown impressive capabilities. But that doesn’t mean they’re without faults, as anyone who has witnessed a ChatGPT ‘hallucination’ can attest. Retrieval-augmented generation (RAG) is a framework designed to make language models more reliable by pulling in relevant, up-to-date data directly related to a user’s query.”

In a recent episode of the AIAW podcast, industry expert Jesper Fredriksson shares insights into RAG models applied to autonomous AI agents. Jesper also discusses RAG models in the automotive industry (Volvo), the evolution of autonomy, and language models’ coding abilities.

