Do we truly understand the nature of business risks associated with AI?

That is a question that has been on the minds of decision-makers since the return of ML and AI in the business solution arena, more than a decade back. And yet, there is no definitive answer to this question.

It is not that decision-makers fail to understand AI-related risks. In fact, many large organizations are investing heavily in research and development on AI risk management. The key issue is that risk is a very broad concept, with interlinkages and dependencies to every component that goes into the analysis, design, development, commercialization, and operation of AI solutions. Therefore, while there is deep risk coverage in some siloed areas such as model interpretability and representational bias, Algorithmic Risk Management as a unified concept is not well understood in the industry. Moreover, there is an urgent need to understand the risks associated with AI from a strategic and business perspective rather than a purely technical one.

The key motivations for a comprehensive understanding of risk are:

- Due to the nature and impact of AI systems, risks can spread across the entire value chain very fast and with unbounded impact if not properly contained and mitigated.

- Risk mitigation cannot be done on a purely technical basis (which is the current understanding and focus of most research in AI risk management). Technical risk management measures always leave a ‘residual risk’ that needs to be managed and mitigated using commercial measures.

The traditional understanding of risk and risk management from business and IT is limited and often dangerously wrong when applied AS-IS in the AI domain. In traditional business and IT, we make explicit business rules, and these rules are mutually exclusive.

This means, for example, a cup of cappuccino cannot be both size M and L at the same time. Therefore ‘Large Cappuccino’ and ‘Medium Cappuccino’ are represented as mutually exclusive entities in IT. In traditional decision-making, there is a series of linear elimination of alternatives down any decision chain. Once we have chosen a particular decision branch (say size M), all other branches at the same level of hierarchy (S and L) become unavailable.

But in AI, decisions are represented as ‘zones’ defined by arbitrary ‘hyperplanes’ within the feature space created by the ML/DL model, and therefore some rules can partially overlap. For example, ‘Size of the cup’ is an independent feature, like the ‘Ratio of coffee to milk in different coffee types’. A slight change in an input parameter (the amount of coffee) might shift the output to a different decision zone (e.g. from M to L, because more coffee requires more milk in a cappuccino, and more milk corresponds to a larger cup size). This cannot be explained by our linear logic. An AI solution therefore requires a different kind of risk analysis. For example, sensitivity analysis on the inputs becomes very important (how much can we increase the quantity of coffee while staying within size M?).
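To make the idea of input sensitivity concrete, here is a minimal sketch in Python. It is not a real ML model: `predict_size` is a hypothetical stand-in for a learned classifier, and the milk ratio and cup volume limits are illustrative assumptions. The sweep simply steps through the input and records where the predicted size flips to a different decision zone.

```python
# Toy illustration only: the ratio and cup limits below are assumed values,
# and predict_size is a hypothetical stand-in for a trained model.
MILK_PER_ML_COFFEE = 4.0  # assumed cappuccino ratio: 4 ml milk per 1 ml coffee
SIZE_LIMITS_ML = [("S", 200.0), ("M", 300.0), ("L", 450.0)]  # assumed max volumes


def predict_size(coffee_ml):
    """Map the amount of coffee to a cup-size decision zone."""
    total_ml = coffee_ml * (1.0 + MILK_PER_ML_COFFEE)
    for label, limit in SIZE_LIMITS_ML:
        if total_ml <= limit:
            return label
    return "L"  # anything larger is still served as the largest size


def sensitivity_sweep(start_ml, stop_ml, step_ml):
    """Return (input, old_label, new_label) wherever the decision changes."""
    flips = []
    previous = predict_size(start_ml)
    steps = int(round((stop_ml - start_ml) / step_ml))
    for i in range(1, steps + 1):
        coffee_ml = start_ml + i * step_ml
        current = predict_size(coffee_ml)
        if current != previous:
            flips.append((coffee_ml, previous, current))
            previous = current
    return flips


if __name__ == "__main__":
    for coffee_ml, old, new in sensitivity_sweep(30.0, 70.0, 1.0):
        print(f"At {coffee_ml} ml of coffee the decision moves from {old} to {new}")
```

Under these assumed numbers, size M holds only between the two reported flip points, which is exactly the kind of safe operating range a sensitivity analysis is meant to expose.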

The risk management needed for AI differs from that in traditional business and IT. To continue with the coffee example, in traditional IT we would create a rule limiting the maximum amount of coffee for size M based on first principles (the size of the paper cup, to avoid overflow) or expert opinion (to avoid bad taste due to higher concentration). The concept of a machine serving L when we have ordered M is impossible in IT logic.

But in AI, the purpose of this rule would be to avoid misclassification of output leading to loss of value or customer satisfaction (e.g. serving a Large coffee while the customer has ordered and paid for a Medium). Risk Management in AI is different both conceptually and in implementation.

In this article, we attempt to understand risk management as a unified concept and discuss a 6-step risk management process using algorithmic risk management tools.

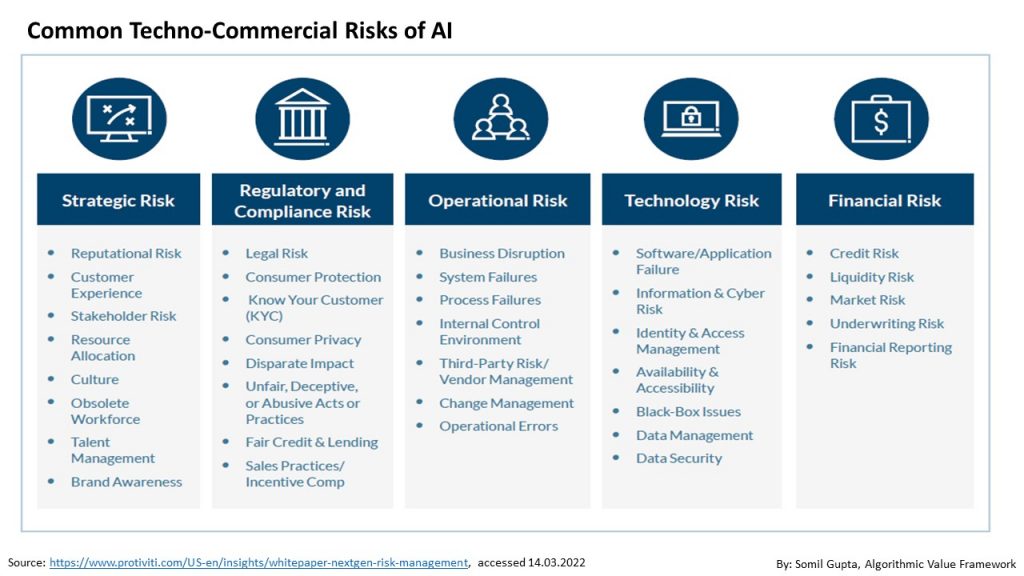

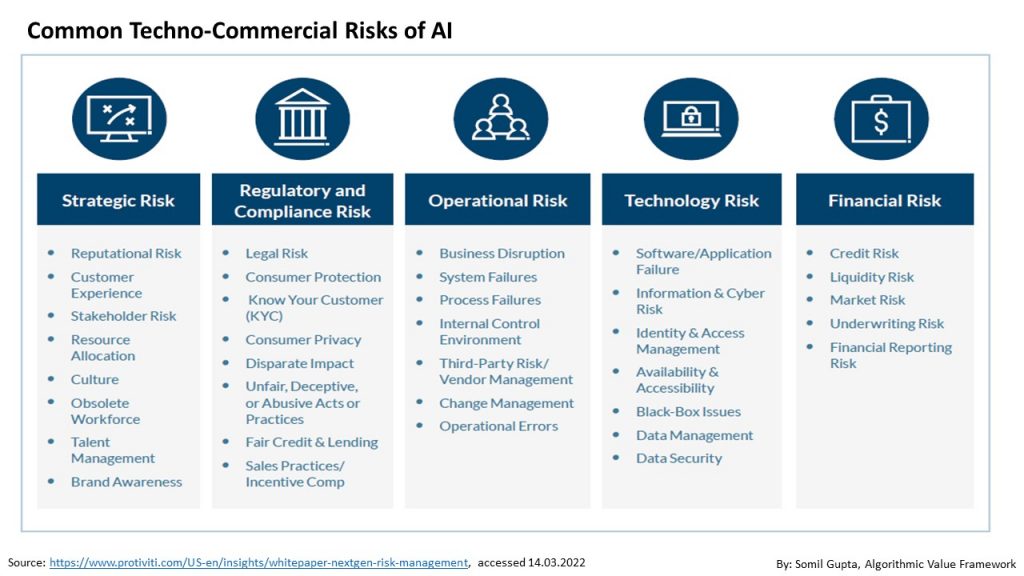

To start with, let’s have a look at a quite comprehensive compilation of AI risks from Protiviti [1].

Based on the co-creation, we have also identified some other sources of risks like:

- AI governance risk (MLOps/DLOps) as a risk category required to tackle version control, validation, performance, and monitoring of these AI models through various tools, resource planning, and governance frameworks and practices

- User Competence Risk as the need for training and coaching for the users of AI-based solutions to overcome scepticism

- Leadership Risk as business leadership’s attitude, inertia, and lack of motivation towards Data Science and AI

- Run-away Loss Risk as erroneous decisions are made and repeated at scale due to lack of identification, analysis, and mitigation

These risks can be identified, assessed, and mitigated with a comprehensive risk management framework that follows a six-step approach.

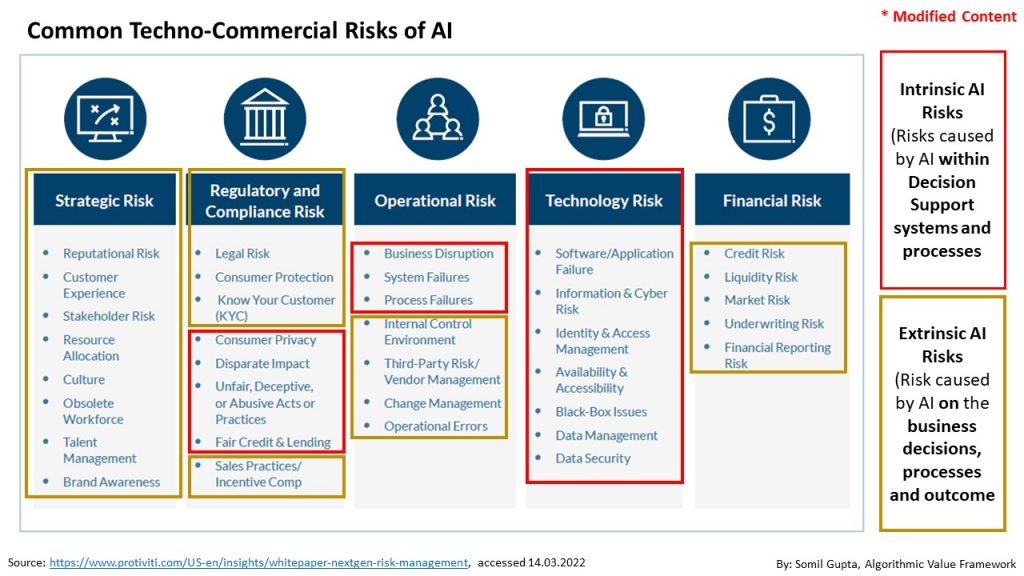

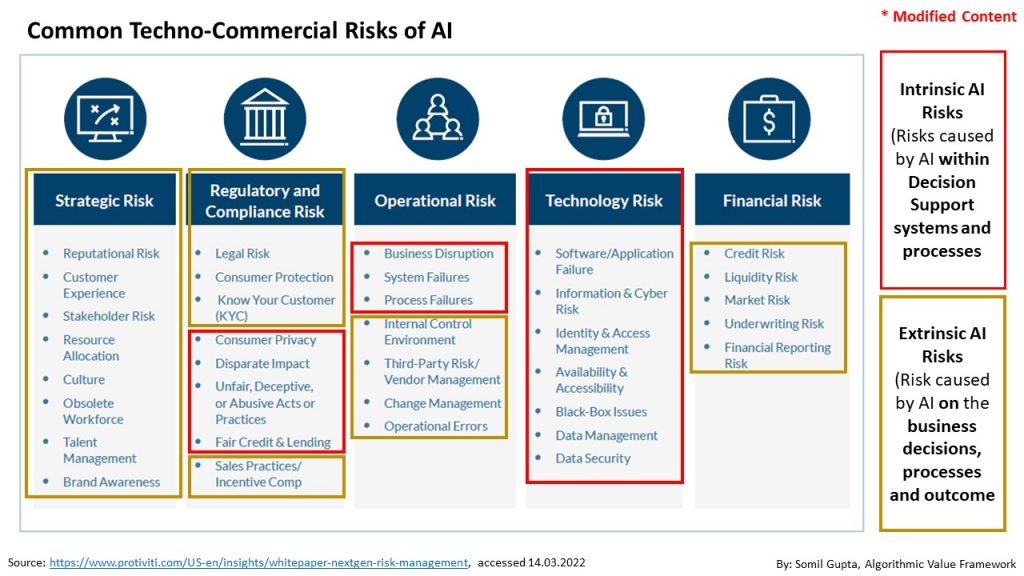

Step 1: Risk Classification

The first step is to classify the risks as intrinsic or extrinsic in order to understand their nature and design risk mitigation and control mechanisms.

– Intrinsic AI Risks are risks caused by AI within Decision Support systems and processes (Data, Model, Fairness, Interpretability)

– Extrinsic AI Risks are risks caused by AI on the business decisions, processes, and outcome (financial, social, market, etc)

Considering this, an ideal risk management framework should balance risk control mechanisms across both technical and commercial dimensions for both types of risks.

Step 2: Risk Management Framework

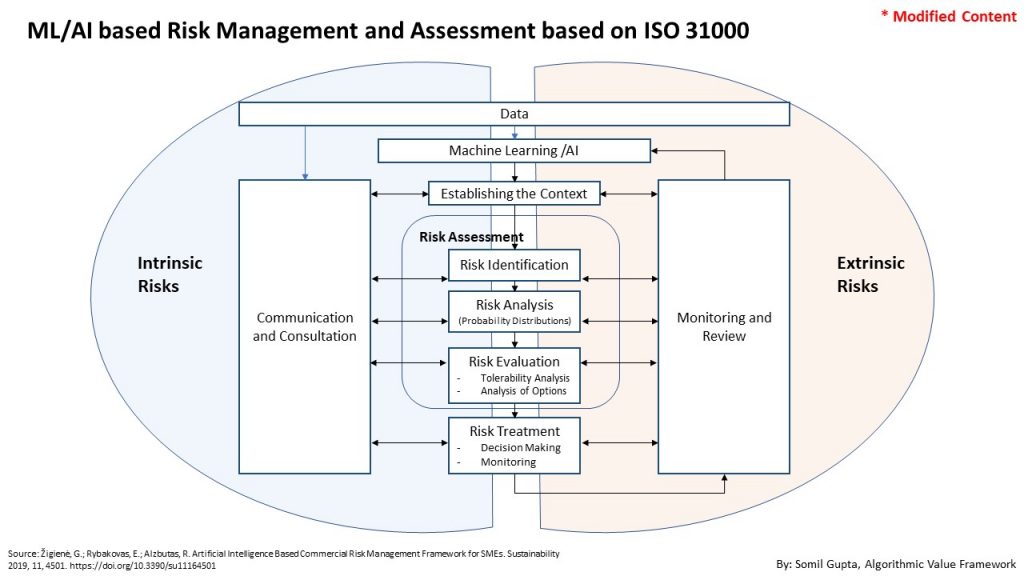

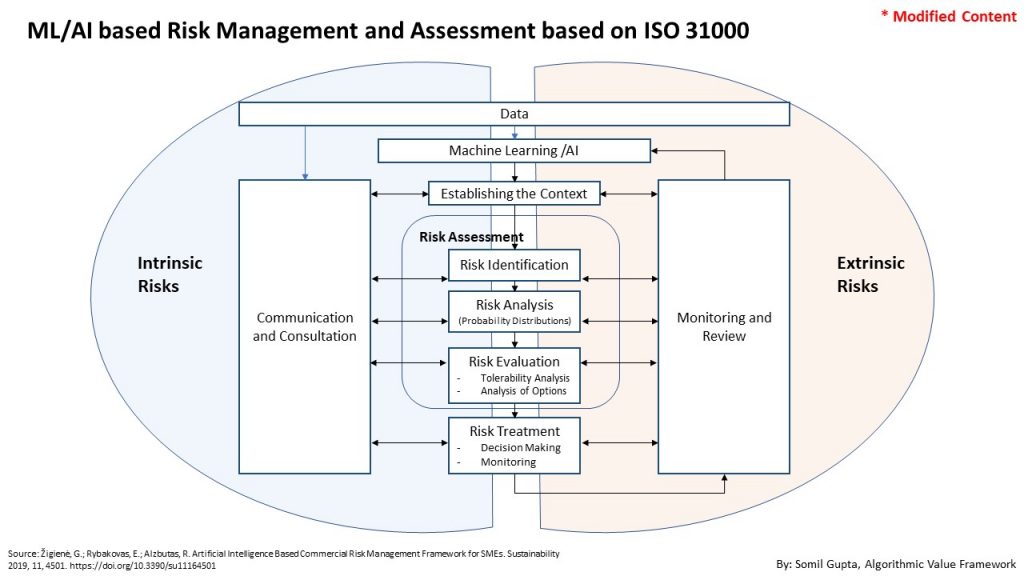

The second step is to define a risk management framework for managing both intrinsic and extrinsic risks of AI in an integrated and structured way. The research paper by Žigienė et al. [2] suggests a 4-step risk management framework using AI, based on ISO 31000.

- Risk Identification: Estimate the probability of an event A with negative commercial consequences, based on statistical/ML and AI methods

- Risk Analysis: Quantify outcomes or scenarios of expected negative consequences

- Risk Evaluation: Prioritize risks based on impact

- Risk Management: Develop risk control, reduction, and mitigation measures
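The four steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions, not the method from the cited paper: the `Risk` class, the use of expected loss (probability times loss) as the impact measure, the `mitigate_above` threshold, and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # step 1: estimated probability of the negative event
    loss: float         # step 2: quantified consequence if the event occurs

    @property
    def expected_loss(self):
        # Assumed impact measure: probability-weighted loss.
        return self.probability * self.loss


def prioritize(risks):
    """Step 3: rank risks from highest to lowest expected loss."""
    return sorted(risks, key=lambda r: r.expected_loss, reverse=True)


def assign_measure(risk, mitigate_above):
    """Step 4: pick a (hypothetical) response based on an assumed threshold."""
    return "mitigate" if risk.expected_loss >= mitigate_above else "monitor"


if __name__ == "__main__":
    register = [
        Risk("payment default", probability=0.05, loss=100_000.0),
        Risk("late delivery", probability=0.20, loss=50_000.0),
        Risk("data drift", probability=0.50, loss=8_000.0),
    ]
    for risk in prioritize(register):
        print(risk.name, round(risk.expected_loss), assign_measure(risk, 6_000.0))
```

In practice the probabilities in step 1 would come from statistical or ML models rather than being typed in by hand; the sketch only shows how the outputs of each step feed the next.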

Step 3: Assessing the Dimensions and Measures for Managing Intrinsic Risks

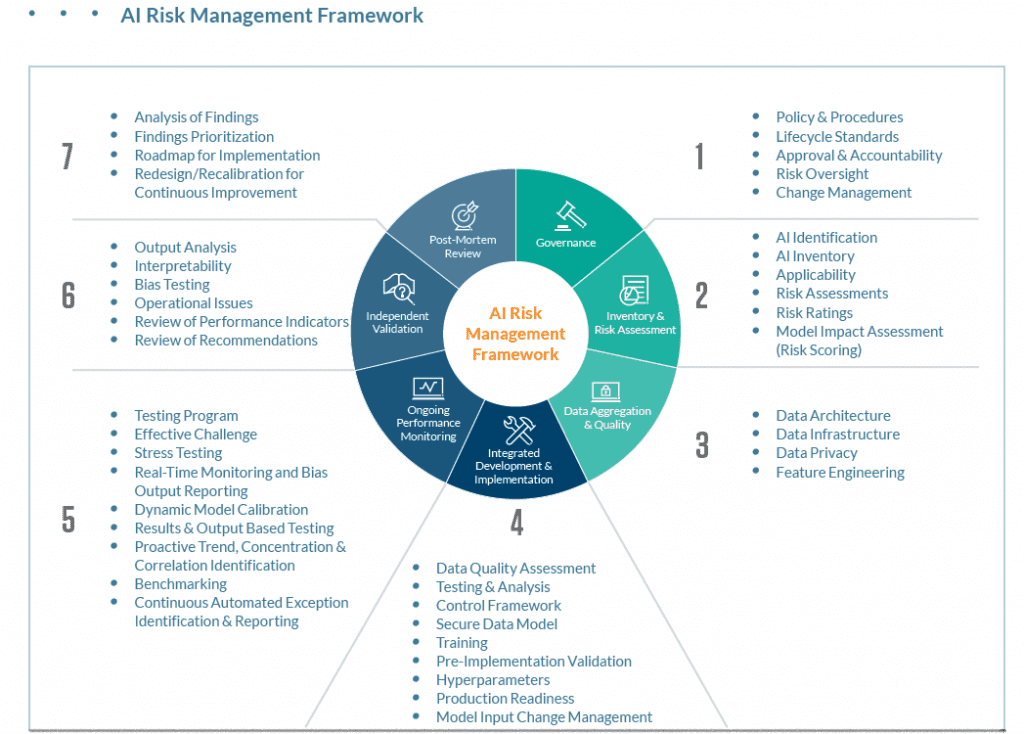

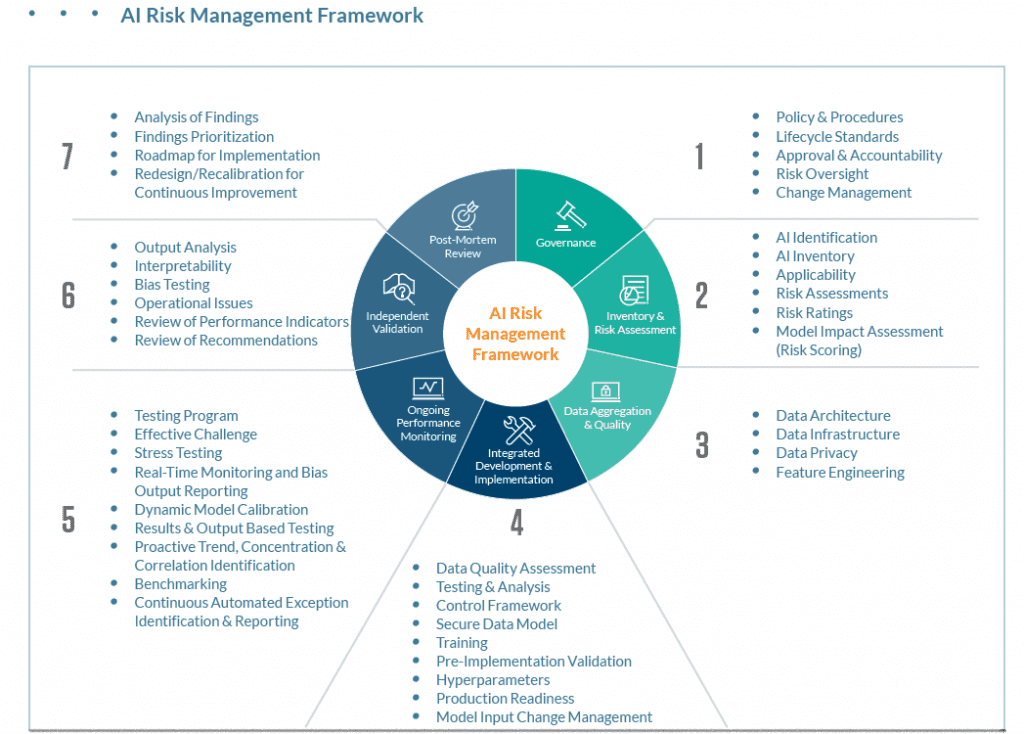

Once again, the risk management framework from Protiviti [1] is one of the most comprehensive that I’ve come across; it covers risk across the data, modeling, testing, Ops, and governance aspects.

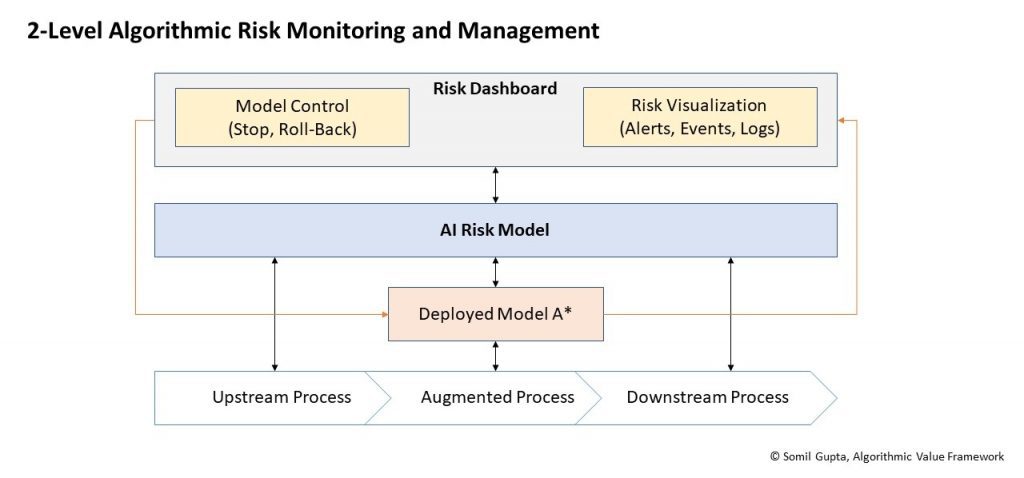

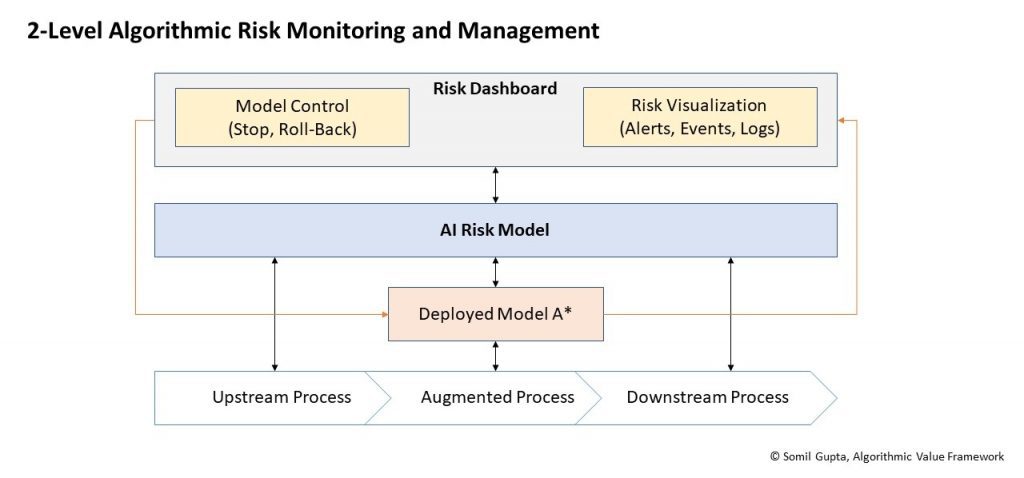

The only area where I probably differ from Protiviti is that I believe risk management must be automated using 2-level monitoring.

There are essentially 3 levels of intelligence at work here:

- AI* is the actual model that is deployed in a live business process and needs to be monitored for risk.

- AI Risk Model is the ML/AI-based model that ingests process data streams from upstream and downstream processes and compares the AI predictor outputs with the “Normal Operating Values” to assess deviations. It also calculates the probabilities of various negative outcomes based on a predetermined Commercial Risk Assessment Model. If the deviations are large and/or the probability of negative outcomes exceeds a predetermined threshold, it raises risk flags and sends them to the dashboard.

- Risk Dashboard is a consolidated log and visualization of the risk events detected and/or predicted by the AI Risk Model for different deployed AI* models. This dashboard must have the capability to stop deployed AI* models, take them offline, or roll back to the last known safe model. The level of intelligence built into the Risk Dashboard will depend upon the complexity and stability of the process being automated.
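The interplay between the three components can be sketched roughly as follows. This is a simplified illustration, not a reference design: the class names, the fixed normal operating range, the flag labels, and the version strings are all assumptions, and the probability of a negative outcome is passed in as a number instead of being computed by a real Commercial Risk Assessment Model.

```python
from dataclasses import dataclass, field


@dataclass
class RiskModel:
    """Second level: checks AI* outputs against assumed operating limits."""
    normal_low: float
    normal_high: float
    max_risk_probability: float

    def assess(self, prediction, risk_probability):
        flags = []
        if not (self.normal_low <= prediction <= self.normal_high):
            flags.append("deviation")
        if risk_probability > self.max_risk_probability:
            flags.append("high_risk_probability")
        return flags


@dataclass
class RiskDashboard:
    """Logs risk events and rolls back to the last known safe model."""
    active_version: str
    last_safe_version: str
    log: list = field(default_factory=list)

    def report(self, model_version, flags):
        if flags:
            self.log.append((model_version, tuple(flags)))
            self.active_version = self.last_safe_version  # roll back


if __name__ == "__main__":
    risk_model = RiskModel(normal_low=0.0, normal_high=100.0,
                           max_risk_probability=0.2)
    dashboard = RiskDashboard(active_version="v2", last_safe_version="v1")
    # 120.0 stands in for an AI* prediction outside the normal operating range.
    flags = risk_model.assess(prediction=120.0, risk_probability=0.1)
    dashboard.report("v2", flags)
    print(dashboard.active_version, dashboard.log)
```

A production dashboard would likely offer more graded responses (alert, pause, take offline) before rolling back; the single rollback action here is only the simplest possible choice.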

A working AI solution for risk management should be based on a set of tools including statistics, analytics, scenarios, value-at-risk (VaR), maximum loss, etc., with all potential risk drivers modeled using a combination of ML models. For example, a supply chain risk such as delayed cargo dispatch and the consequent inventory stockouts (like the one IKEA is facing right now) requires a model based on GPS and other tracking data, while the risk of delayed payments or default requires a model based on credit ratings.

Finally, the risk estimates themselves must be presented as risk distributions that show the full range of possible risk, rather than as the point estimates so prevalent in the industry, so that decisions can be informed and balanced.
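As a rough illustration of the difference between a point estimate and a risk distribution, the sketch below simulates losses with a simple Monte Carlo model and reads off the mean, a value-at-risk quantile, and the maximum loss. The event probability, the exponential loss severity, and the function names are assumptions chosen for illustration only; a real model would be fitted to observed risk events.

```python
import random


def simulate_losses(n, event_probability, mean_loss, seed=0):
    """Draw n simulated losses: zero if no event, exponential severity if one."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    losses = []
    for _ in range(n):
        if rng.random() < event_probability:
            losses.append(rng.expovariate(1.0 / mean_loss))
        else:
            losses.append(0.0)
    return losses


def value_at_risk(losses, confidence):
    """Empirical quantile: the loss not exceeded in `confidence` of the cases."""
    ordered = sorted(losses)
    index = min(len(ordered) - 1, int(confidence * len(ordered)))
    return ordered[index]


if __name__ == "__main__":
    losses = simulate_losses(10_000, event_probability=0.1, mean_loss=20_000.0)
    print("mean (point estimate):", round(sum(losses) / len(losses)))
    print("95% VaR:", round(value_at_risk(losses, 0.95)))
    print("maximum simulated loss:", round(max(losses)))
```

The mean alone hides how heavy the tail is; reporting it alongside the VaR and maximum loss gives decision-makers a view of the whole distribution.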

Step 4: Assessing the Dimensions and Measures for Managing Extrinsic Risk

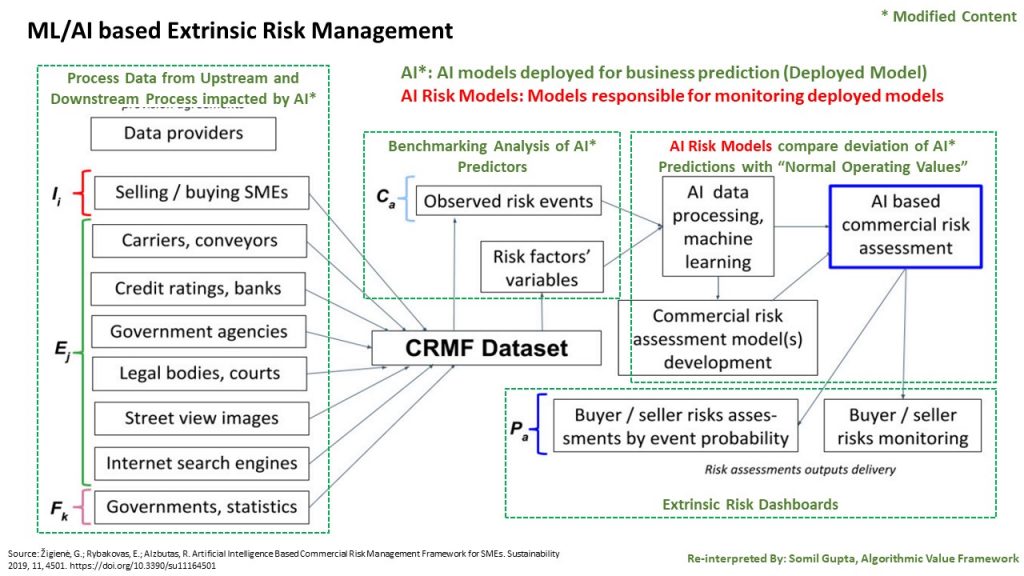

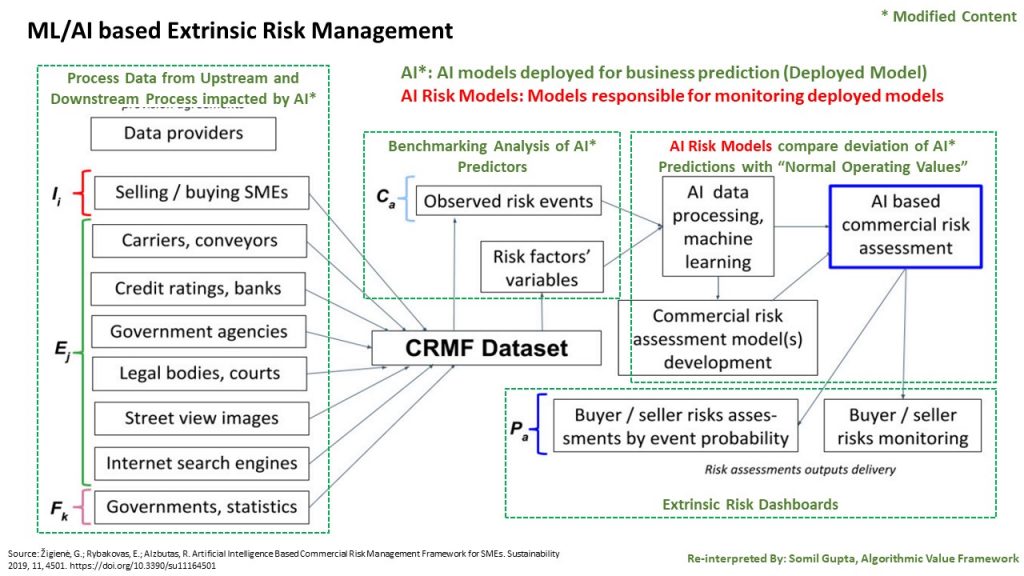

The research paper by Žigienė et al. [2] also defines an AI-based risk management model for extrinsic risk management, which I have overlaid with the same 2-level risk management model discussed in the previous step.

Consider a process that is automated/augmented by an operational AI-based system (AI*) that takes input data from the upstream processes and outputs predictions that flow into the downstream processes. The task at hand is to manage the extrinsic risk of this deployed AI* model, that is, to detect or predict the negative outcomes and risks that AI* causes for business decisions, processes, and outcomes.

The figure above describes the conceptual model proposed by the author. The volume and variety of data needed for extrinsic risk management vary with the complexity and scope of the process. Effective management of extrinsic risks requires a strategic approach to value chain partners from the beginning. We also need a significant amount of labelled data with ‘observed risk events’. This requires distributed measurement of deviations, abnormal events, and other hazardous situations on key process variables across the chain. Finally, organizations need a complete portfolio of risk models to identify different types of risk. Since this requires time and resources, organizations must define their key risk drivers early in order to determine their risk model roadmap.

Step 5: Risk Scoring and Impact Assessment

While intrinsic risks are managed and mitigated within the technical solutions developed by Data and AI experts, the extrinsic risks and ‘residual risks’ (risks that cannot be successfully contained and mitigated by technical and operational measures) require a commercial strategy and framework.

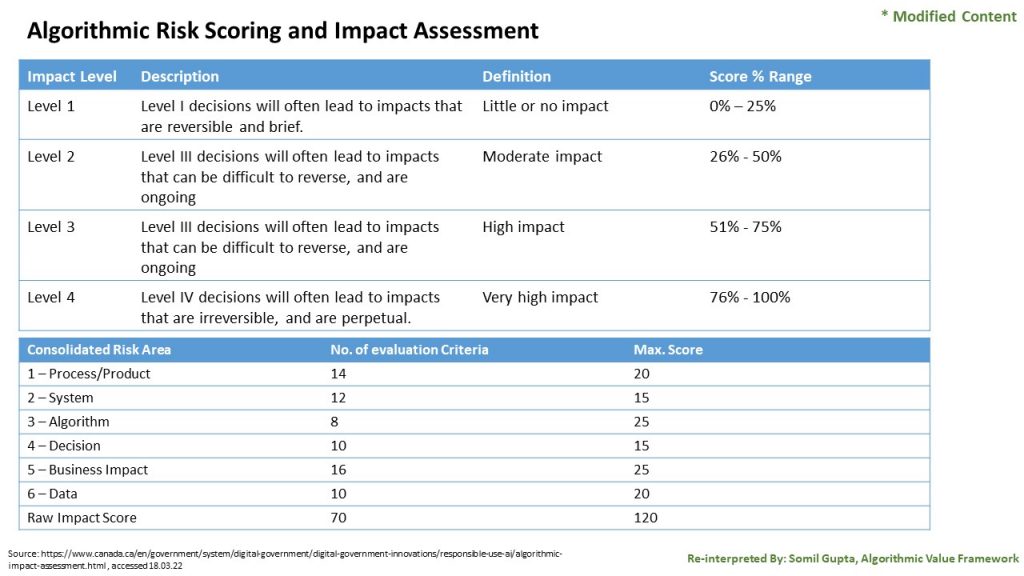

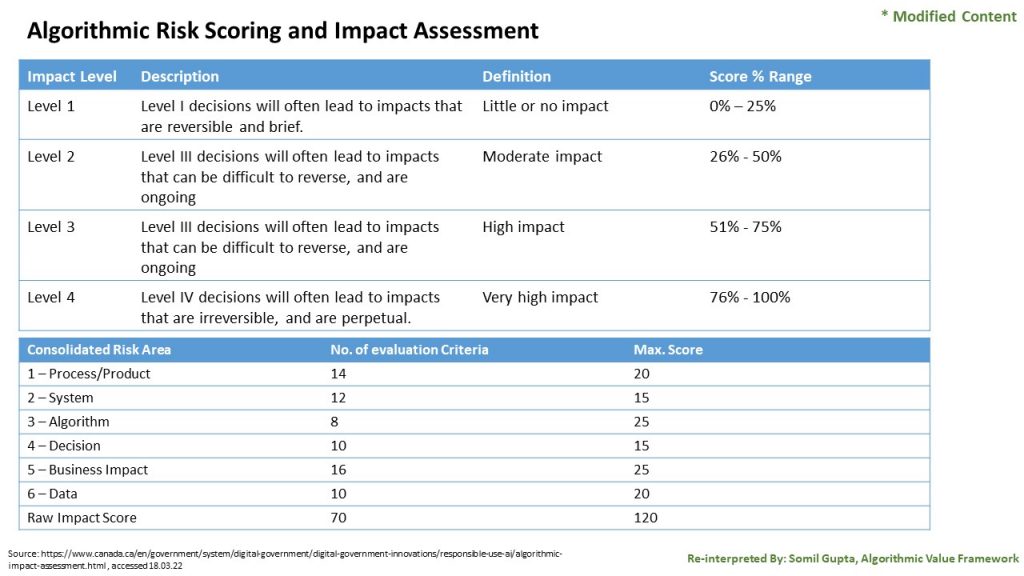

The Canadian government has developed a simple tool [3] for assessing the impact of extrinsic and residual risk. It allows us not only to measure the risk but also to frame a governance strategy to monitor and manage those risks.

The framework in its simplest form consists of a 2-step process for risk assessment that can later be used for risk measures and governance.

- A set of risk areas and risk evaluation criteria, represented as a simple scoring tool that calculates ‘risk scores’.

- The risk scores are then divided into risk levels and given specific meaning based on their impact on the business or people.
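The two-step process above can be sketched as a small scoring function. The criteria names, point scales, and level thresholds below are hypothetical placeholders chosen for illustration; they are not taken from the Canadian tool, and each organization would define its own.

```python
# Assumed thresholds: upper bound of the normalized score for each level.
RISK_LEVELS = [
    (0.25, "Level I"),
    (0.50, "Level II"),
    (0.75, "Level III"),
    (1.00, "Level IV"),
]


def risk_score(answers, max_points):
    """Step 1: normalize the points earned across all criteria to 0..1."""
    earned = sum(answers[criterion] for criterion in max_points)
    possible = sum(max_points.values())
    return earned / possible


def risk_level(score):
    """Step 2: map the normalized score to a named risk level."""
    for upper_bound, label in RISK_LEVELS:
        if score <= upper_bound:
            return label
    raise ValueError("score must be between 0 and 1")


if __name__ == "__main__":
    # Hypothetical evaluation criteria, each scored from 0 to 4.
    max_points = {"reversibility": 4, "people_affected": 4, "data_sensitivity": 4}
    answers = {"reversibility": 3, "people_affected": 2, "data_sensitivity": 1}
    score = risk_score(answers, max_points)
    print(score, risk_level(score))
```

Keeping the scoring and the level mapping as separate steps means the thresholds can be revised by the governance team without touching the questionnaire itself.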

Step 6: Risk Governance

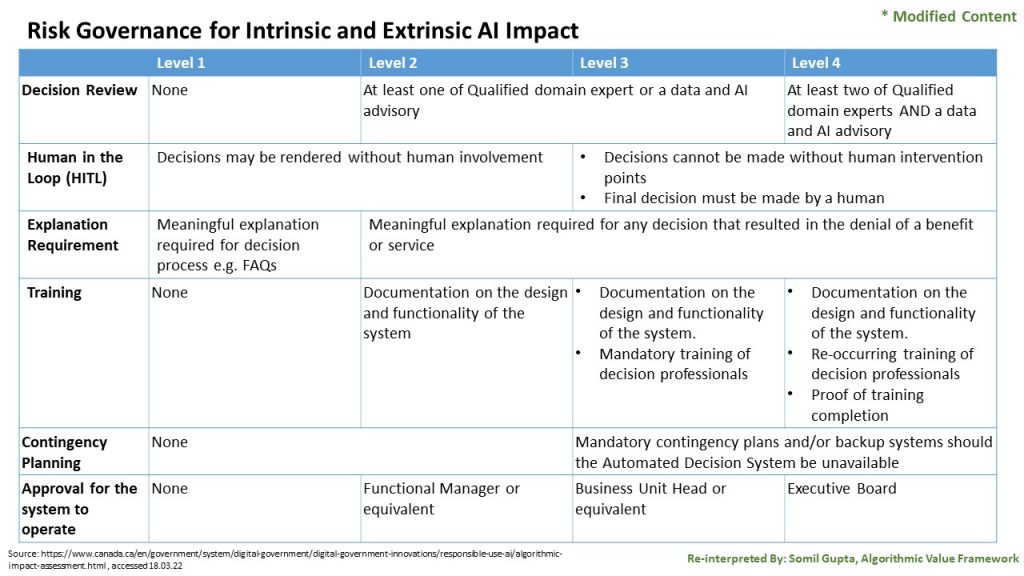

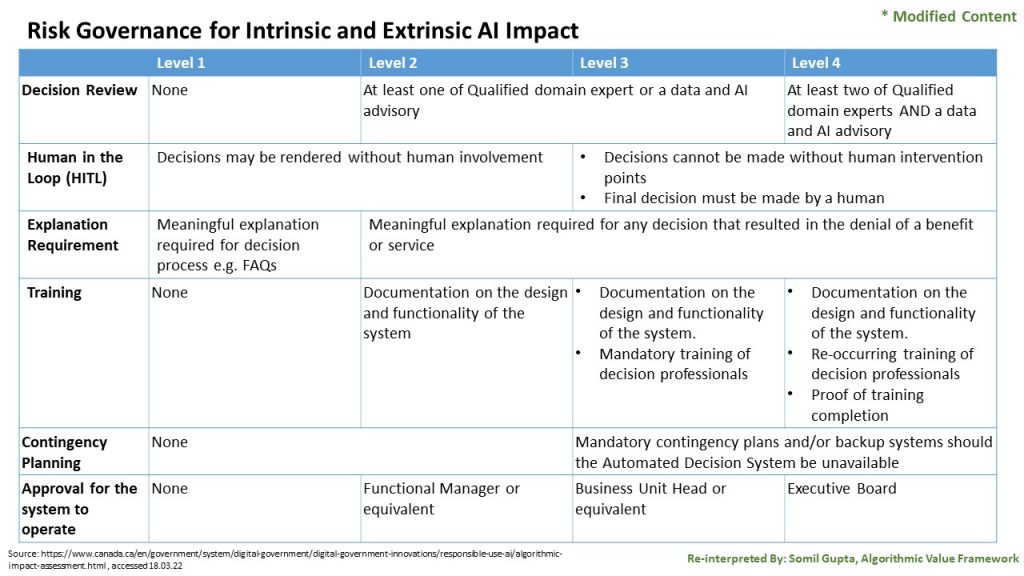

Building upon the risk scores in step 5, the governance measures are defined for each risk level. As evident from the table below, these risk measures are ‘over and above’ the technical measures. While being simple, the framework is quite powerful if implemented correctly and can be adopted by anyone with little hassle. The real work lies in identifying the risk area, evaluation criteria, and governance measures most suitable for the company.

Need for a Culture of Risk Tolerance in Unified Risk Management

In many organizations, risks and hazards are confused and the two terms are used synonymously. When people say risk, they really mean hazards. As a result, risks are treated as a Pandora’s box better left unopened, or there is an unwillingness to assess risks systematically and holistically. In other cases, risk management is delegated to data and AI teams under the false impression that risks ‘originate’ from the models. In truth, most models are quite well-behaved and gentle during design and development. The risks result from the interaction of models with real-world processes. Hence, there is an urgent need to create a culture of ‘risk tolerance’ instead of ‘risk avoidance’ or ‘risk denial’ in the organization for effective commercial governance.

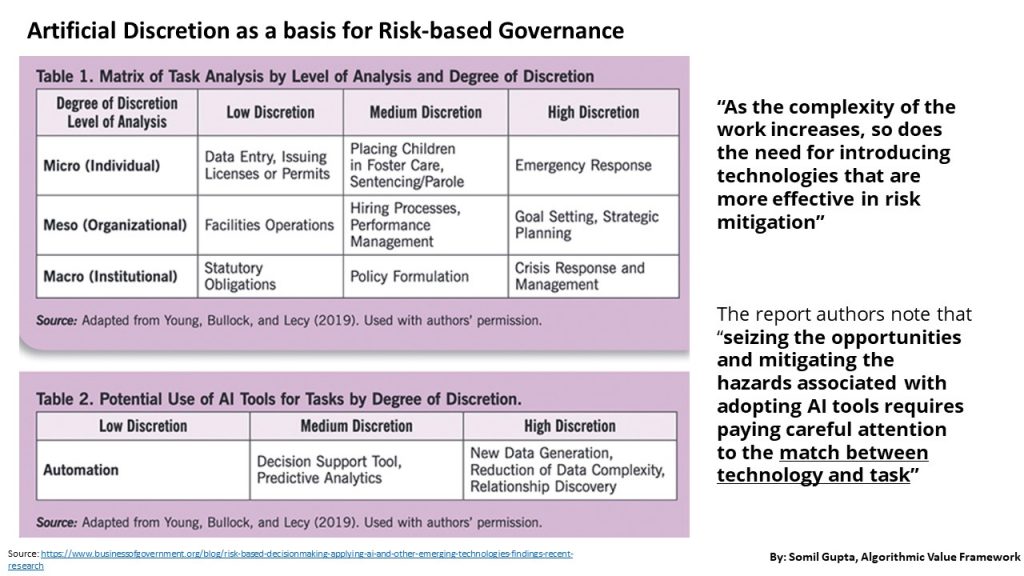

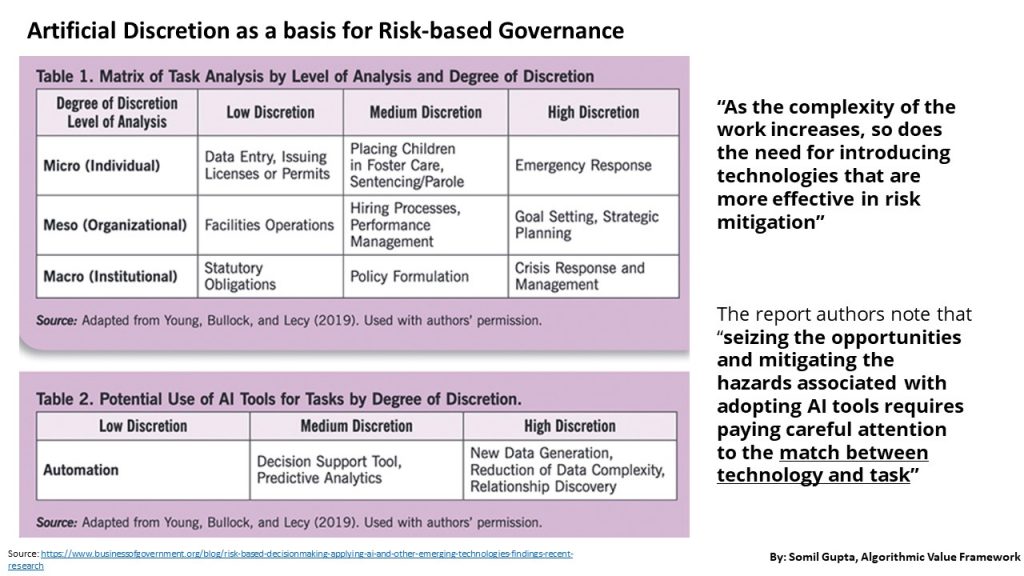

The framework below describes one such model for risk-based governance in a public administration setting. In this case, risks are assessed based not on the nature of the model but on the ‘complexity’ of the decision task for a human decision-maker.

The aforementioned framework assesses task complexity on two dimensions:

- ‘Degree of Discretion’ needed by decision-makers to complete the task effectively

- The level of impact of decision (micro-meso-macro or operational-tactical-strategic)

AI’s appropriateness for a task is a function of the task complexity, the quality and availability of data, the technical requirements, the limitations of the AI system, the risk and uncertainty associated with the task, and the political feasibility of using nonhuman solutions. The optimal use of AI depends upon how it is used to augment or automate the exercise of administrative or managerial discretion at different decision impact levels.

Analysis of task complexity using managerial discretion in this way can be used to derive intrinsic and extrinsic risks and governance requirements “independent of the technical analysis” (as discussed in the previous posts in this theme). A culture of risk tolerance can then be developed using cost-benefit frameworks incorporating all benefits and costs including socio-economic issues like ethics and fairness to give policymakers the ability to:

- Plan scenarios and estimate confidence intervals while building the business case for AI systems over time, taking into account both quantifiable financial costs and intangible risks like bias or privacy.

- Compare the risks associated with AI with the benefits throughout the lifecycle of an algorithm’s development and operation.

Risk governance is one of the greatest challenges for the organization because the greatest concern in the implementation of AI is the readiness and ability of people to understand and work with these new solutions. Also, companies can’t just ‘apply’ an AI-based Risk Management solution. They instead require a continuous evaluation of ML solutions for different risk categories.

Using risk-based frameworks could be a great way to communicate risk and decisions about how and when to use AI – including the risks of leveraging AI to support a decision, relative to the risks of decisions based solely on human analysis. It also provides incentives for increasing confidence in data through proper data quality, security, data user rights, and fairness. The question is how seriously companies are considering risk management in their overall Data/AI strategy. While business leaders these days have an intuitive understanding of the risks, we are still far from having AI Risk Management as a separate discipline within organizations.

Potential Solution and Way Forward

Since the source of this article is a series of LinkedIn posts, it was a great opportunity to source ideas from practitioners and two of them stand out very well as a conclusion.

- Bridge the Gap Between Management Theory and Practice

The organising principles for a new management thinking are well researched in academia. The ideas dating back to the 60s and 70s are more relevant than ever, but they were never bridged into practice. The way forward is to address this work based on TRL (Technology Readiness Level) 1-9: keeping a firm foundation in the research, and then moving it to practical application, which means developing concrete methods, organisation constructs, roles, and ways of working.

This insight about what is needed and how we can address it has matured since 2021, but our main challenge is to close the gap from theory, to concepts, to keynotes/posts, to real education and practical use. At the same time, we must be careful not to lose the target audience on the way by making it too complicated. This means addressing the agenda in a way that is relevant for the Executive, Tactical, and Operational layers of an organization.

- Design for Safety Principles for AI

Design for Safety principles are likely to be very useful tools for getting AI-based solutions to meet real life in a safe, ‘design for X’ manner. The dreams of automation are real, but autonomy, risk, and capabilities go hand in hand. A risk-based and design-for-X approach to applying AI solutions with human-machine interplay may make them more readily available and, at the same time, more robust against failure and more resilient when risks are realised.

To summarize, a risk-based view of AI is very helpful for planning and for addressing the commercial and social challenges and impact of AI, provided that a structured approach is followed for risk monitoring, assessment, control, and mitigation across the organization through a combination of technical and commercial governance measures.

References:

[1]: https://www.protiviti.com/US-en/insights/whitepaper-nextgen-risk-management, accessed 14.03.2022

[2]: Žigienė, G.; Rybakovas, E.; Alzbutas, R. Artificial Intelligence Based Commercial Risk Management Framework for SMEs. Sustainability 2019, 11, 4501. https://doi.org/10.3390/su11164501

[3]: https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/responsible-use-ai/algorithmic-impact-assessment.html, accessed 18.03.2022

[4]: https://www.businessofgovernment.org/blog/risk-based-decisionmaking-applying-ai-and-other-emerging-technologies-findings-recent-research

About the Author:

Somil Gupta is an AI Strategy and Monetization Advisor based in Sweden. He specializes in developing commercialization and value realization strategy to grow data-driven business and monetize investments in data and AI. He has been awarded the Nordic Data and AI Influencer 2021 award for his work in this field. Somil consults clients in bridging the gap between their data/AI vision for the business and their current capabilities to realize that vision. In addition, he also supports companies through ‘pro-bono’ work through the open-source community for Data and AI – Airplane Alliance where Somil is the chapter lead for Data and AI Commercialization chapter.

Featured image credits: Joshua Woroniecki on Pixabay

The views and opinions expressed by the author do not necessarily state or reflect the views or positions of Hyperight.com or any entities they represent.
