This year promises to be really fruitful for AI if the latest image-generating model released by OpenAI – DALL·E is any indication. OpenAI released DALL·E only 5 days into the New Year. This article will explore what makes the latest OpenAI model so exciting for the AI community.

DALL·E is a 12-billion parameter version of GPT-3, a transformer language model, which generates images from text. The neural network’s name is a play on words combining surrealist painter Salvador Dali and Pixar’s science fiction bot, WALL.E, hinting to its intended ability to transform words into images with uncanny machine-like precision.

Its predecessor GPT-3 – a powerful natural language processing model, which OpenAI released in July last year, amazed the world with its ability to generate human-like text, including Op-Eds, poems, sonnets, and even computer code.

Built on top of the revolutionary predecessor GPT-3 Model, DALL·E is trained to parse through text prompts and generate breathtaking images using a large dataset of image-text pairs.

It’s designed to receive both the text and the image as a single stream of data packing up to 1280 ‘tokens’, where a token refers to a particular symbol from a vocabulary. As an illustration, each letter from A-Z from the English alphabet is a token. In DALL.E’s world, a token refers to both text and image input.

What is impressive about the transformer-based neural network is that it can not only render anthropomorphised images of animals and objects, but also do text rendering, transform existing images, combine objects and concepts in a single image and complete missing parts of an image.

What DALL·E can do

OpenAI provided a detailed description using a series of interactive visuals and the text prompts to illustrate DALL·E’s capabilities.

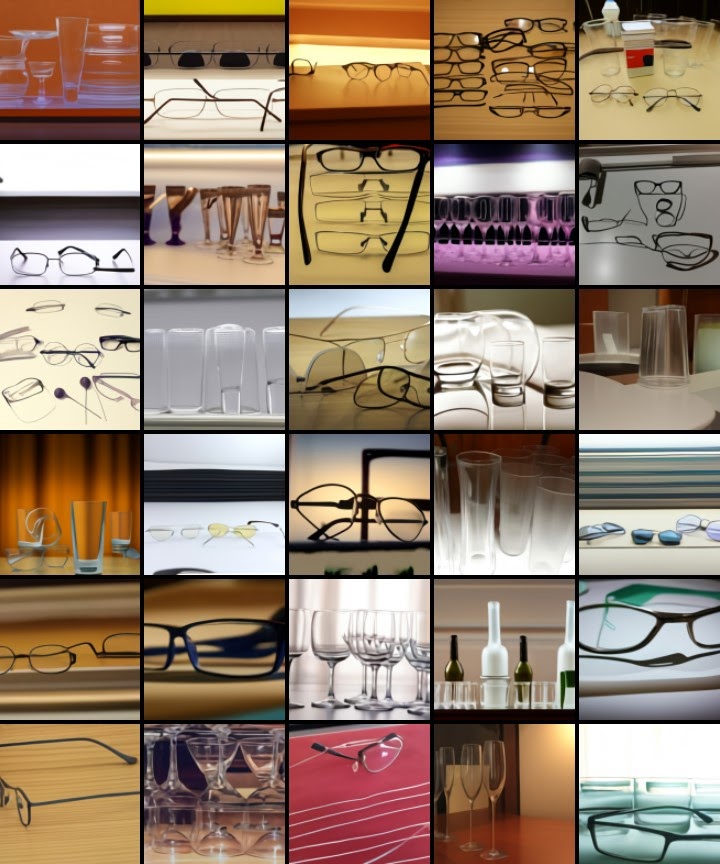

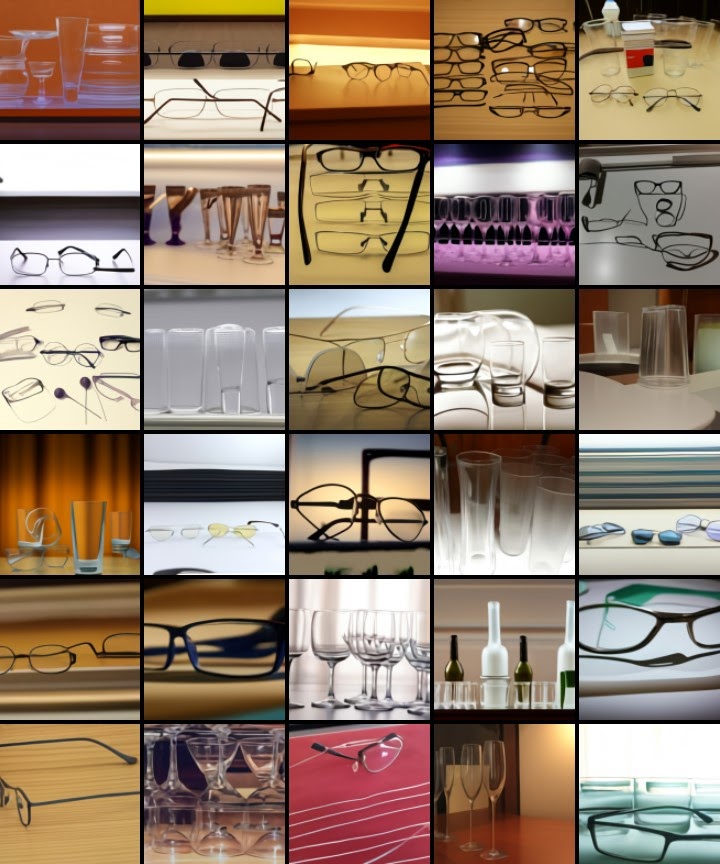

Changing object attributes and the number of times they appear in the image

DALL·E can draw multiple copies of an object when prompted to do so, but can’t count past three. Open AI also found that when it’s prompted to draw objects for which there are multiple meanings, such as “glasses,” “chips,” and “cups” it sometimes draws both interpretations.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Drawing multiple objects simultaneously and controlling their spatial relationship

DALL·E correctly responds to some types of special positions, but not others. For example, Open AI saw that “sitting on” and “standing in front of” sometimes appear to work, “sitting below,” “standing behind,” “standing left of,” and “standing right of” do not.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Control over the viewpoint of a scene and the 3D style in which a scene is rendered

DALL·E can draw each of the animals in different views. It’s interesting that some of these views, such as “aerial view” and “rearview,” require knowledge of the animal’s appearance from unusual angles. While others, such as “extreme close-up view,” require knowledge of the fine-grained details of the animal’s skin or fur, reports OpenAI.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Visualising the internal and external structure of an object

DALL·E can also draw the interiors of different kinds of objects, as on the walnut images below. Open AI finds that DALL·E can draw the interiors of several different types of objects.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Inferring contextual details

DALL·E can sometimes render text and adapt the writing style to the context in which it appears. For example, it recognises that text prompts like “a bag of chips” and “a license plate” require different types of fonts, compared to “a neon sign” and “written in the sky.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

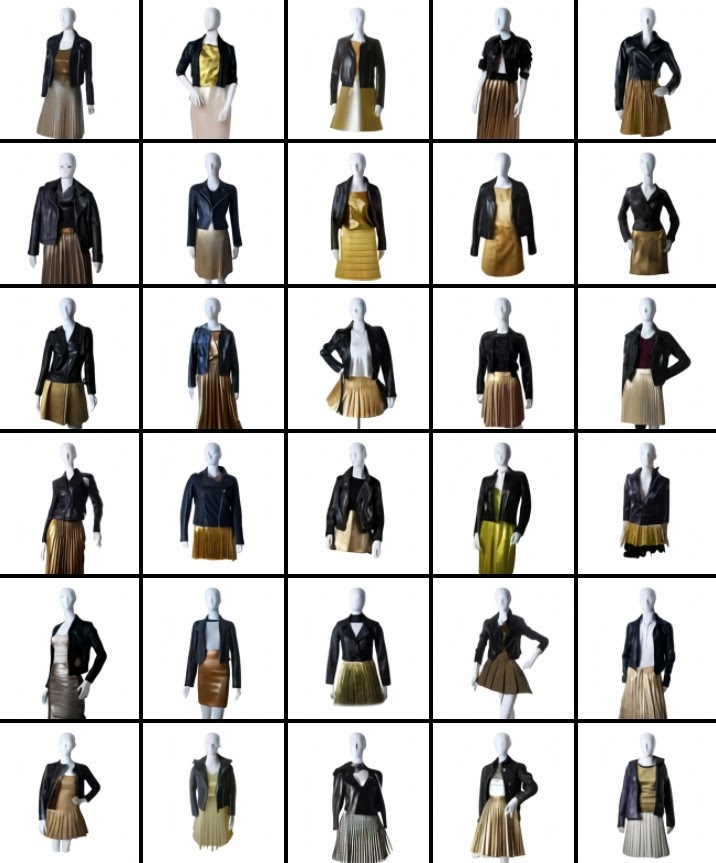

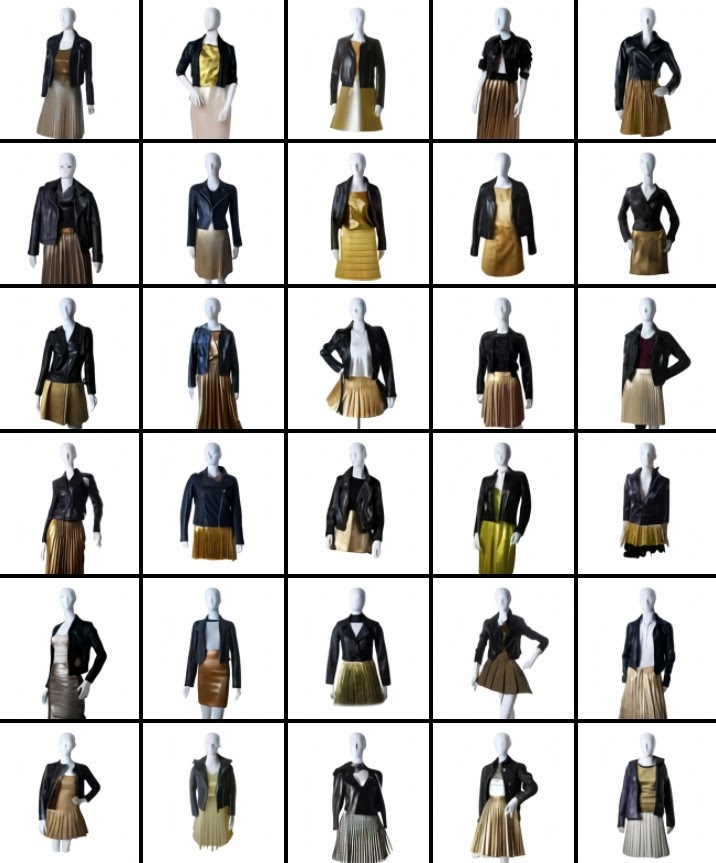

Create fashion and interior design pieces based on preceding capabilities

DALL·E can render unique textures such as the sheen of a “black leather jacket” and “gold” skirts and leggings.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

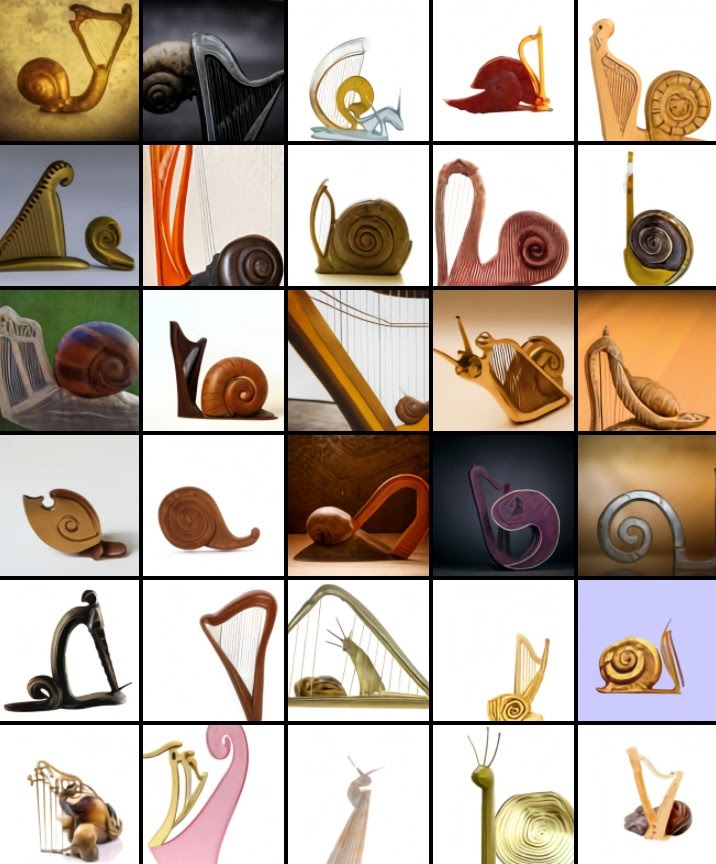

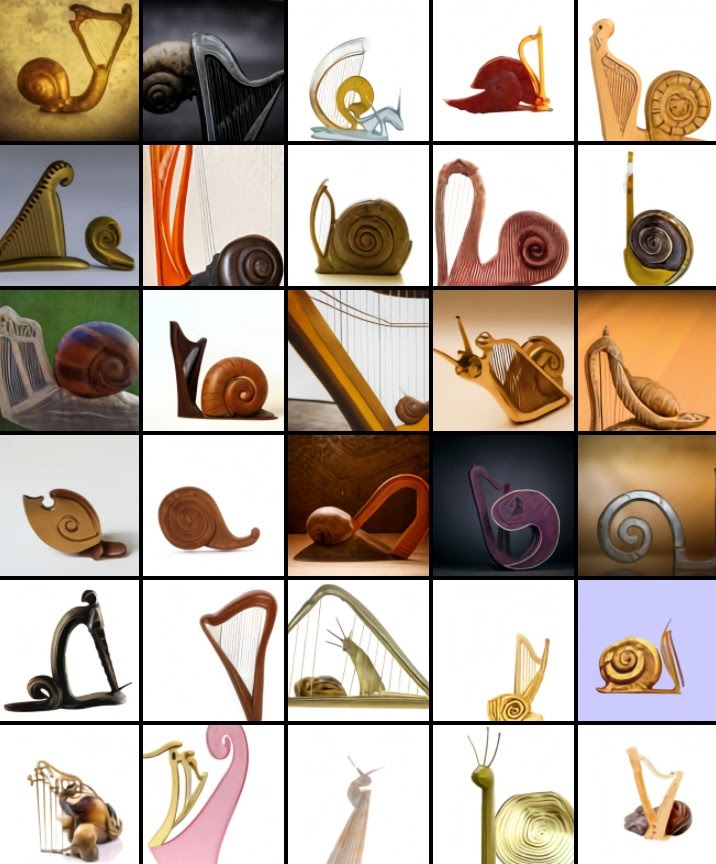

Combining unrelated concepts and creating realistic objects

DALL·E can also combine disparate ideas to synthesise objects, some of which are unlikely to exist in the real world, OpenAI discovered. However, it sometimes fails to bind some attribute of the specified concept and just draws the two as separate items.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

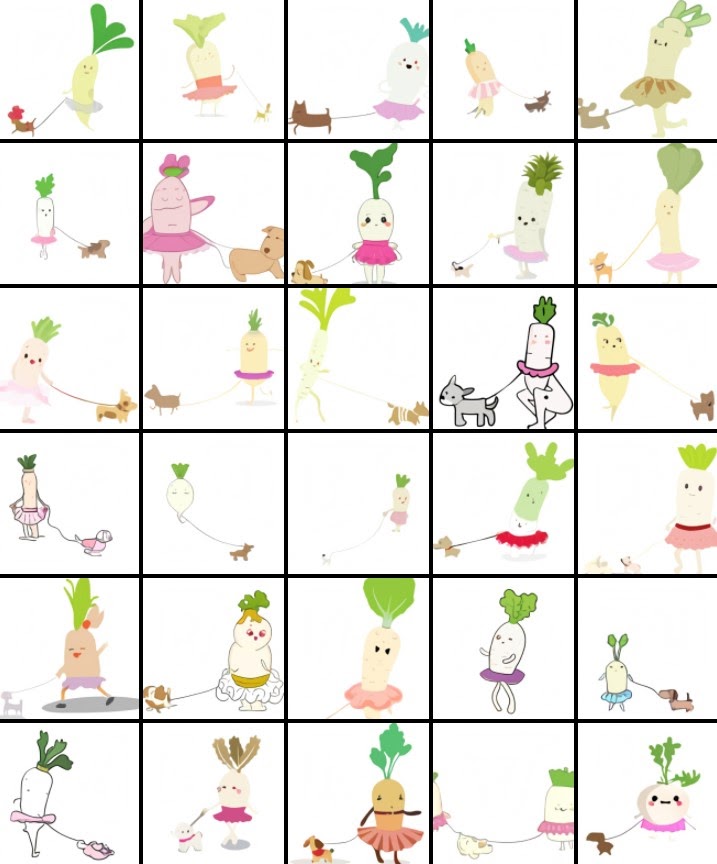

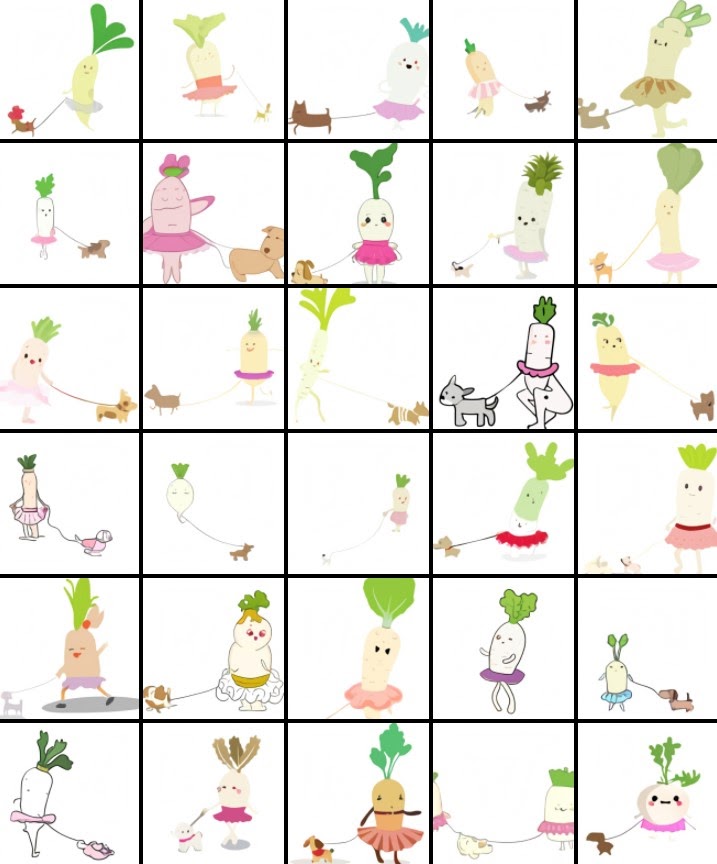

Animal illustrations and anthropomorphised versions of animals and objects

Besides combining unrelated concepts to generating images of real-world objects, DALL·E can also transfer some human activities and articles of clothing to animals and inanimate objects, such as the daikon radish below.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Performing zero-shot visual reasoning

DALL·E extends GPT-3’s ability to perform tasks only from a description and a cue to generate the answer, without any additional training. This way, the transformer model can perform several image-to-image translation tasks when prompted with the right description.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

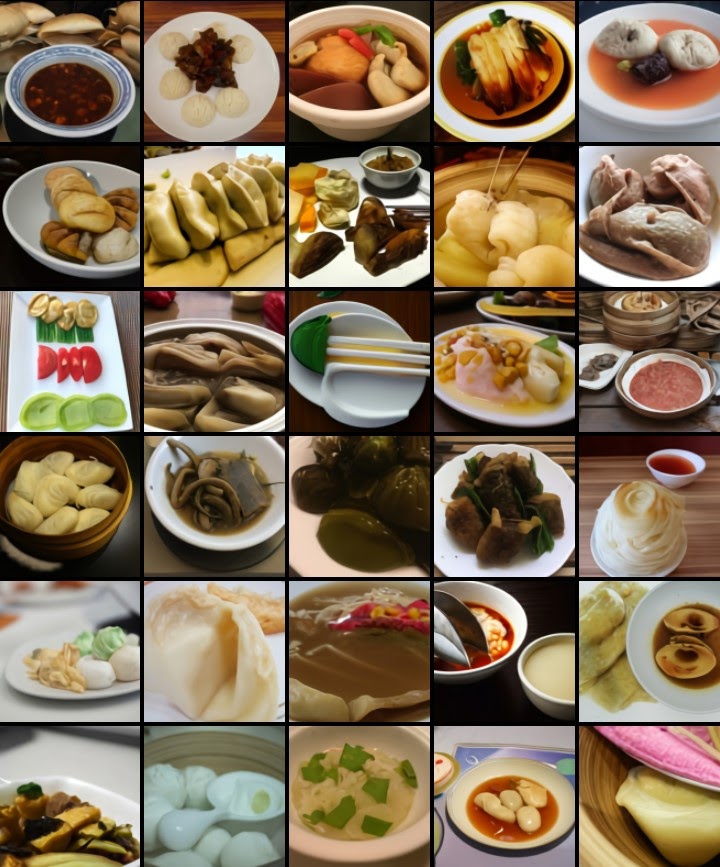

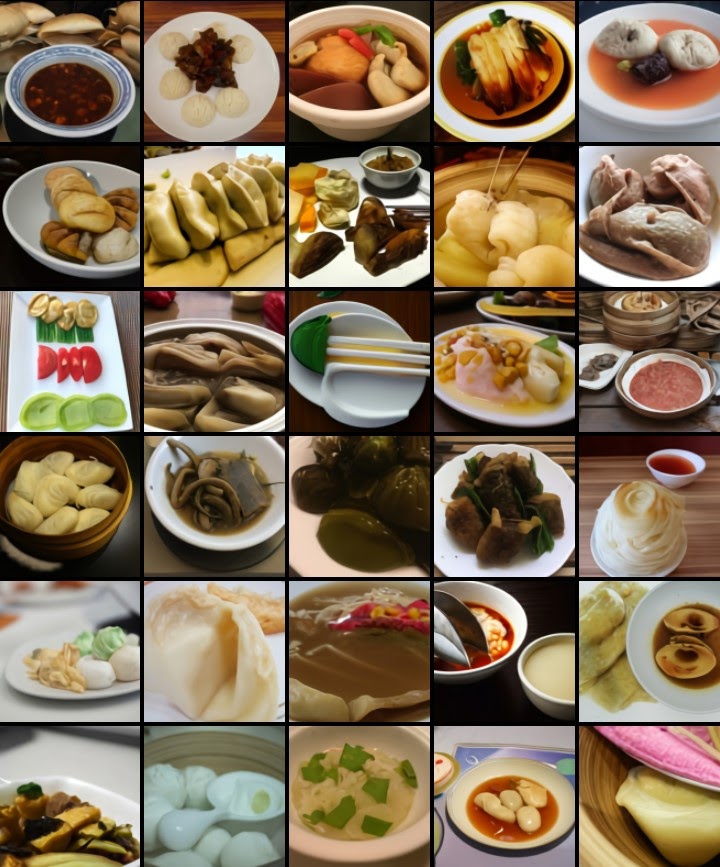

Understanding of geographic facts, landmarks and neighbourhoods

OpenAI reports that DALL·E has knowledge of country flags, cuisines, and local wildlife. However, it often reflects superficial stereotypes for food or wildlife instead of presenting the diversity from the real world.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

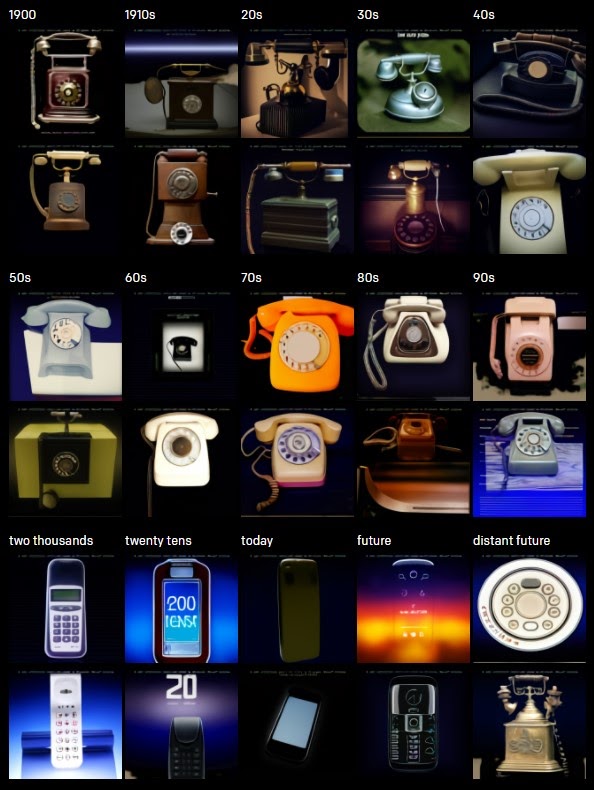

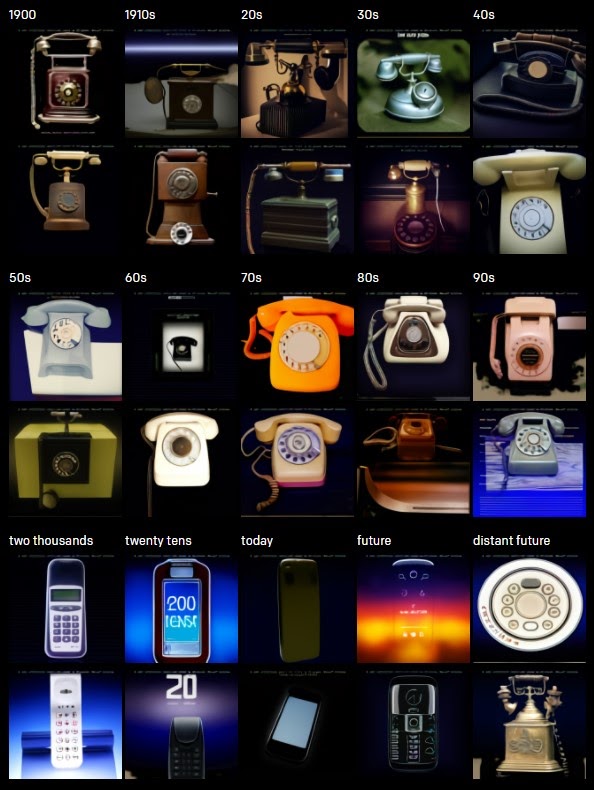

Reasoning temporal knowledge

Besides presenting objects that vary over time, DALL·E shows knowledge of concepts that vary over time. It has learned about basic trends in design and technology over the decades, as seen from the photo of “phone” from various decades.

Image source: OpenAI (Screenshot by Author, Permission granted by OpenAI)

Reservations towards and limitations of DALL·E

As seen from the AI-generated images above, DALL·E’s skills are impressive. It can not only paint a picture, but it can answer questions visually promoted by text descriptions. However, experts are pointing out to its limitations and expressing some reserve despite its promising applications.

One limitation that transpires through all sets of images is that DALL·E tends to get overwhelmed with longer strings of text becoming less accurate with the more added description. Additionally, it often reflects cultural stereotypes in the images, for example, generalising Chinese food as simply dumplings, and not showing the diversity of the cuisine.

Some people also point out that all we know about DALL·E’s capabilities come from one blog post by OpenAI. Experts are still in anticipation of the full paper about the methodology to get the full picture. The results might also be cherry-picked, although it’s stated in the blog post that they are not – something that would be potentially dispelled with a benchmark analysis in the full paper.

Several sources also suggested that although DALL·E delivers impressive results, it should be mistaken for general artificial intelligence. Based on the failed promises of the models that are previously acknowledged as revolutionary, it would be hard to trick these models into making basic mistakes, states Orhan G. Yalçın in his blog post reviewing the revolutionary transformer language model.

The meaning of DALL·E

Scepticism aside, it’s clear that DALL·E and GPT-3 are two examples of remarkable achievements in deep learning: that extraordinarily big neural networks trained on unlabeled internet data (an example of “self-supervised learning”) can be highly versatile, able to do lots of things weren’t specifically designed for, emphasises Dale Markowitz.

DALL·E is smart enough to come up with sensible renditions of not just practical objects, but even abstract concepts as well. It also shows good judgment in bringing abstract, imaginary concepts to life, as we’ve seen with the images generated by the text prompt “a snail made of a harp. a snail with the texture of a harp.”

Although General Artificial Intelligence is far from reality, DALL·E is a giant leap towards it. With its capabilities to understand the human requests, apply own knowledge, generate photorealistic and unique images, it opens new ways for various applications in marketing, art, design and visual storytelling.

Add comment