The saying “With great power comes great responsibility” has been relevant to many industries over the years, and today it is especially applicable to the AI field.

As organisations move from words to principles, from experimentation to operationalisation and from isolated projects to scaling, it has become essential to know how to do this responsibly and take care of the issues of accountability, responsibility and transparency – ART.

AI has been present for decades, so why are people now worried about the ethical use and trustworthiness of this type of system? According to Virginia Dignum, Professor of Responsible Artificial Intelligence at Umeå University, the concerns, and the reasons we need ethics in AI, fall into three areas.

- Datification – We are more than our data: not all problems in the world are solved by data collection or data analytics. Moreover, data is not a natural resource; it is something we create for a specific purpose. There is a danger that we see data as a metric for success and only tackle problems for which data is available. We need to diversify our approach to problem-solving and to addressing societal and humanitarian situations.

- Power – Who is developing AI? What are the motivations for using AI? Who is deciding? Those with the power to decide whether or not to develop an AI system have the power to determine what type of AI we are using, what for, and who benefits from it. Much more consensus effort is needed to address this issue, since those deciding are deciding for all of us, and their motivations are the motivations of all of us.

- Sustainability – The cost of AI, and its societal and environmental impact, are factors that must be part of the decisions and metrics for the success of AI.

“Despite of all possibilities that AI systems can provide, people and organisations are responsible, the results and actions of the system are not a responsibility of the computer or the system itself,” says Professor Dignum, who adds that Responsible AI “is a multidisciplinary field where we need to integrate engineering and natural science, with social science to develop and analyse issues of governance; the legal, societal and economic impact of decisions; value-based design approaches; inclusion and diversity in design.”

This broad and diverse approach should not focus only on the effects of AI systems, but on how we are developing and designing them. In other words, Responsible AI needs to be considered continually throughout the AI life cycle.

Just to recap, AI is defined by many in many ways. According to Juan Bernabe Moreno, Chief Data Officer and Global Head of Analytics and AI at E.ON, AI is “a set of techniques that enable us to find answers to questions we couldn’t answer before just with the data we have”, and the most important technique in the debate about responsible AI is machine learning (ML).

Training a good model is only the first step of the journey to create value from AI. The true value comes when the model is deployed and used in a business context. For this to happen, organisations need a governance framework, and they need to monitor models over time and retrain or replace them when needed. Otherwise, models will not reflect the world we are living in.

Basically, everything comes down to the data on which the ML models are trained, data that is collected by people. It also comes down to understanding that data is a representation of reality, not reality itself, and that it might not cover the same population, group of people or context in which the model is being used. There might be data hubris, as well as situations where there is not enough data or the data has not been properly evaluated, a lack of accuracy, issues of bias and discrimination, deep fakes, and the issues of “learning from the crowd”, including how intelligent or not the crowd behind the data is.
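To make “monitor models over time” a bit more tangible, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), which compares how a feature was distributed in the training data with how it looks in production. It is only an illustration and was not presented by the speakers: the function names, the ten equal-width bins, the 0.5 smoothing constant and the 0.2 alert threshold are all assumptions chosen for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; 0.0 means identical bin shares."""
    if len(reference) == 0 or len(current) == 0:
        raise ValueError("both samples must be non-empty")
    low, high = min(reference), max(reference)
    if high == low:
        raise ValueError("reference sample has no spread to bin")
    width = (high - low) / bins

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Values outside the reference range fall into the first or last bin.
            counts[min(max(index, 0), bins - 1)] += 1
        # Smoothing (assumed constant 0.5) keeps empty bins from producing log(0).
        return [(count + 0.5) / (len(values) + 0.5 * bins) for count in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


def needs_retraining(
    reference: Sequence[float], current: Sequence[float], threshold: float = 0.2
) -> bool:
    """Flag the model for review when drift exceeds an assumed threshold."""
    return population_stability_index(reference, current) > threshold


if __name__ == "__main__":
    training_ages = [20 + i % 40 for i in range(400)]    # hypothetical training data
    production_ages = [45 + i % 15 for i in range(400)]  # hypothetical live data
    print(needs_retraining(training_ages, production_ages))
```

A check like this only signals that the data has moved away from what the model was trained on. Whether to retrain, replace or retire the model remains a decision for the people accountable under the governance framework.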

How to Move From Principle to Practice?

Principles, regulations, guidelines and strategies

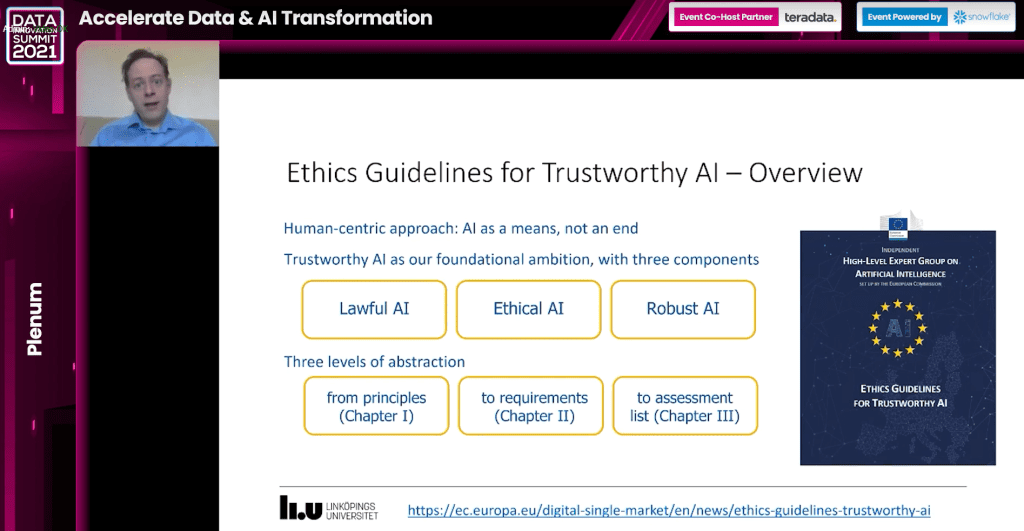

To tackle all issues with AI systems and their trustworthiness, countries and organisations (UNESCO, EU, WEF, OECD, Council of Europe etc.) started to develop AI principles, regulations, guidelines and strategies.

They are all asking for the same things – responsibility, transparency, concern for democratic values and human rights. Regulation should ensure public acceptance and drive transformation and business differentiation. According to some experts, however, there is sometimes a lack of consistency or even consensus. These regulations are also described at a high level of abstraction. The difficulty for everyone developing and using AI systems is how to translate these words into concrete applications.

The conclusion is that there is an appetite for guidelines for ethical AI, and the European approach to Trustworthy AI takes a clear stand: individuals and societies want AI, but not just any AI. They want AI that they can trust.

The ethical guidelines of the European Union were released a few years ago, and the latest move is the proposed AI Act. The proposal takes a risk-based approach, distinguishing unacceptable risks, high risks, “transparent” risks and minimal or no risks in the use of AI systems. The EU also developed the Assessment List for Trustworthy AI (ALTAI). Organizations can work through this list of requirements to see whether they meet them, using internal evaluation and quality assurance processes.

In this article, we want to share “The Ethics of AI Ethics”, a document that lists, evaluates, analyzes and compares 22 guidelines, highlighting overlaps but also omissions across codes of ethics and codes of conduct, and showing what kinds of issues they address, for example privacy protection, accountability, fairness and explainability.

Operationalisation of Responsible AI

A lot of work has been done in terms of standards, and large organizations have come up with their own processes. There are many tools for the assessment, monitoring and auditing of AI systems, and a lot has been done for awareness and participation through education and training.

Josefin Rosén, Nordic Leader AI & Analytics at SAS Institute and DAIR Awards winner in 2021 for “AI ethics professional of the year”, describes these four key recommendations when operationalizing responsible AI:

- Communicate and create awareness around the topic and the importance of Responsible AI in organizations. Work with the team that is developing and deploying AI applications to come up with a set of practical guidelines for responsible AI, and treat them not as a separate topic but as a key part of the AI and analytics strategy.

- Diversity is key to preventing individual biases from being reflected in the data and then in the AI application, since the application learns from the data. Everyone in a team brings different things to the table, reflects different things in the data, asks different questions, and makes different mistakes. In AI, as in other areas, organizations need to consider many different aspects: background, gender, culture, ethnicity, skillset, knowledge, age etc. Diverse teams are strong teams.

- Embed responsible AI throughout the AI life cycle. When the EU proposed to regulate AI, one of the main concerns from the field was that it could potentially slow down innovation and that documentation, reports and assessments requested would become bottlenecks that could delay the deployment. This is important because it is where the balance happens: keeping a competitive advantage and protecting the citizen.

- Work together and alongside the machines. Form strong synergistic teams that can scale responsible AI, create business impact and build trust in systems that act fast and accurately, and keep doing so over time.

Responsible AI in Practice

Retail is one of the industries where trust in AI systems is being questioned, and where the challenge is how to build and maintain trust between organisations and customers.

Many reports show that consumer trust is at risk regarding digitalisation, data and AI in retail. For example, according to reports, only 25% of Swedish consumers believe that Swedish retail companies can process their data safely and securely. About 44% are worried that the personal information they share in digital forms is being used in a way they are uncomfortable with. About 67% think that increased personal data use is generally negative.

ICA Sweden, one of the biggest retailers in Sweden, acted on these numbers. It defined an accountability framework specifying how data is managed, and it orchestrated the resources, processes and technology to manage data in compliance with laws and regulations as well as ICA’s principles and values. All of this is meant to ensure that the company is customer-focused, protects its customers’ integrity and provides them with the best service on the market. Following these actions, ICA’s latest survey showed that 63% of its customers believe that the company collects data to offer more relevant services. The company concluded that “the issue is not creating trust, but maintaining”. In practice, this means collaboration:

- Data owners (the business) collaborate with IT and with data management and analytics. The company has a team for customer communication, an excellent example of collaboration between the business and IT. The business is in charge of the customer master data and the definition of new customer-facing communication. The company has a recommendation engine that analyses customer purchasing behaviour and makes recommendations based on it. The decision on which recommendations are actually used lies with the business, whereas the technology is built by the technical team: data engineers, data architects and data scientists.

- Active involvement of the information security department at all levels during daily business, ensuring customer integrity is protected at all times. The company has specific roles like data protection managers and guardians that are a resource on all development projects involving the use of customers’ data to ensure compliance and maintain customer focus throughout the process.

- According to GDPR, customers have the right to demand an extract of all the data the company keeps about them. They also have the right to request the removal of all their records. This transparency is essential for customers to share their data with the company. An even more critical factor is the level of improved service that customers receive from sharing data. For example, loyal customers get concrete payback on the items they purchase most often. Customers are also asked whether the company may handle further information about them, such as dietary preferences or allergies, so that even better service can be provided. What an individual customer might wish for sometimes conflicts with general laws and regulations, so the company must be careful in balancing the risk of conflicts between the customer and the law.
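The customer rights described in the last point can be sketched in a few lines of code. This is a hypothetical illustration, not ICA’s actual system: the `CustomerDataStore` class, its method names and the list of consent-only categories are all invented for the example, and the data lives in memory only.

```python
import json

# Assumption: categories that may only be stored after the customer agrees.
CONSENT_ONLY_CATEGORIES = {"dietary", "allergies"}


class CustomerDataStore:
    """Hypothetical in-memory store supporting export and erasure requests."""

    def __init__(self) -> None:
        self._records = {}   # customer_id -> {category: [items]}
        self._consents = {}  # customer_id -> set of agreed categories

    def grant_consent(self, customer_id: str, category: str) -> None:
        self._consents.setdefault(customer_id, set()).add(category)

    def add(self, customer_id: str, category: str, item: str) -> None:
        # Refuse sensitive categories unless the customer has opted in.
        agreed = self._consents.get(customer_id, set())
        if category in CONSENT_ONLY_CATEGORIES and category not in agreed:
            raise PermissionError(f"no consent to store '{category}' data")
        by_category = self._records.setdefault(customer_id, {})
        by_category.setdefault(category, []).append(item)

    def export(self, customer_id: str) -> str:
        """Return everything held about one customer as JSON (right of access)."""
        return json.dumps(self._records.get(customer_id, {}), sort_keys=True)

    def erase(self, customer_id: str) -> int:
        """Delete all records and consents; return the number of items removed."""
        removed = self._records.pop(customer_id, {})
        self._consents.pop(customer_id, None)
        return sum(len(items) for items in removed.values())


if __name__ == "__main__":
    store = CustomerDataStore()
    store.add("c-1", "purchases", "oat milk")
    store.grant_consent("c-1", "allergies")
    store.add("c-1", "allergies", "peanuts")
    print(store.export("c-1"))
    print(store.erase("c-1"))
```

A real implementation would also have to deal with the tension mentioned above: some records may need to be kept for legal reasons even after a customer asks for removal, which this sketch deliberately leaves out.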

Another positive example of Responsible AI in practice comes from the fashion industry and the journey at H&M Group. You will have a unique opportunity to hear more about it at the tpo33 event, which this time focuses on “The Modern Data Strategy for Trustworthy AI”. Among the speakers will be Linda Leopold, Head of Responsible AI & Data at H&M Group, who will share more on “Creating and implementing a Responsible AI & Data Strategy”.

Conclusion

Should we regulate the technology or the effects of the technology? What is the positive or negative impact of regulations? Why is it unethical to discuss AI ethics? There is an ongoing discussion on these topics. But responsibility and ethics will stay at the top of the agenda for some time to come, because any approach to a challenge will involve the merged strengths of people and machines, education and increased understanding and competence, and being part of a large ecosystem that provides access to knowledge, data or competence.

Featured image credits: ThisisEngineering RAEng on Unsplash
