A 2020 report on the state of AI found that 50% of companies had adopted artificial intelligence in at least one business function. In the last few years, a growing number of companies have implemented artificial intelligence and machine learning across hundreds of use cases, a scale that is impossible to manage manually.

At the same time, the deployment of AI solutions and machine learning models in enterprises needs to be operationalised. Data scientists and ML engineers have tools to create and deploy models but that’s just a start. The models need to be deployed in production to deal with real-world use cases.

However, the daunting fact is that around 90 per cent of machine learning models never make it into production. In other words, only one in ten of a data scientist’s workdays actually ends up producing something useful for the company. Those models that do make it into production take at least three months to be ready for deployment. This added time and effort builds up to a real operational cost and a slower time to value.

Solving this challenge requires a framework or methodology to reduce manual effort and streamline the deployment of ML models. That framework is ModelOps.

What is ModelOps

Let’s explore what ModelOps means, how it differs from MLOps and whether it’s emerging as an approach to help properly manage ML models throughout their lifecycle.

ModelOps has been gaining momentum as an approach in AI and Advanced Analytics with a promise to move models from the lab to validation, testing and production as quickly as possible while ensuring quality results. It enables managing and scaling models to meet demand and continuously monitor them to spot and fix early signs of degradation.

Many resources offer somewhat similar definitions of ModelOps.

Writing in KDNuggets, Stu Bailey, Co-founder and Chief Enterprise AI Architect at ModelOp, describes ModelOps as enterprise operations and governance for all AI and analytic models in production. It ensures independent validation and accountability of all models in production that enable business-impacting decisions, no matter how those models are created.

Writing for Vitalflux, Ajitesh Kumar states that MLOps (or ML Operations) refers to the process of managing your ML workflows. It’s a subset of ModelOps that focuses on operationalising ML models that have already been created or are being actively used in production.

Writing in Data Science Central, Kirsten Lloyd states that ModelOps is the missing link in today’s approach, connecting existing data management solutions and model training tools to the value delivered via business applications. She continues, “ModelOps has emerged as the critical link to addressing last-mile delivery challenges for AI deployments; it is a superset of MLOps, which refers to the processes involved to operationalise and manage AI models in use in production systems.”

ModelOps is how analytical models are cycled from the data science team to the IT production team in a regular cadence of deployment and updates. In the race to realise value from AI models, it’s a winning ingredient that only a few companies are using, says Jeff Alford, SAS Insights Editor. It allows you to move models from the lab to validation, testing and production as quickly as possible while ensuring quality results, he adds.

The difference between ModelOps and MLOps

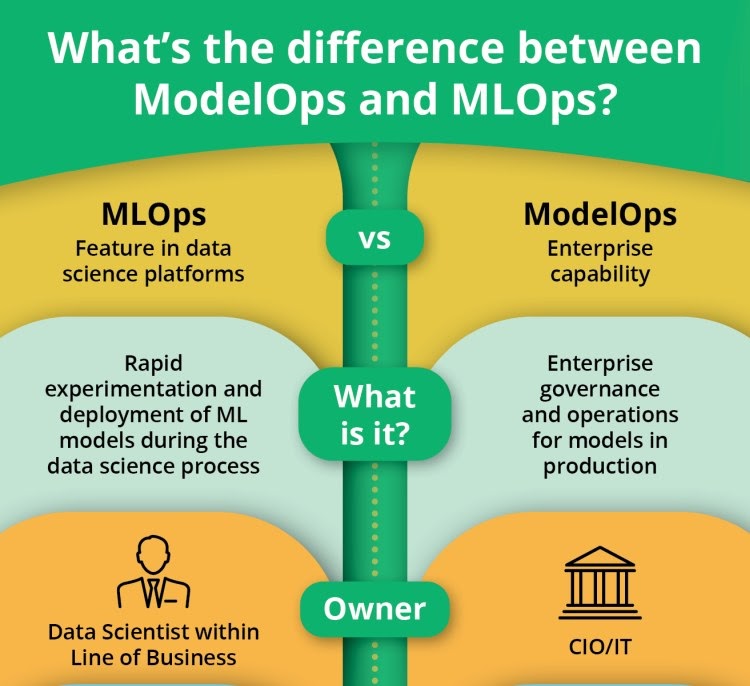

As we can see from the infographic above,

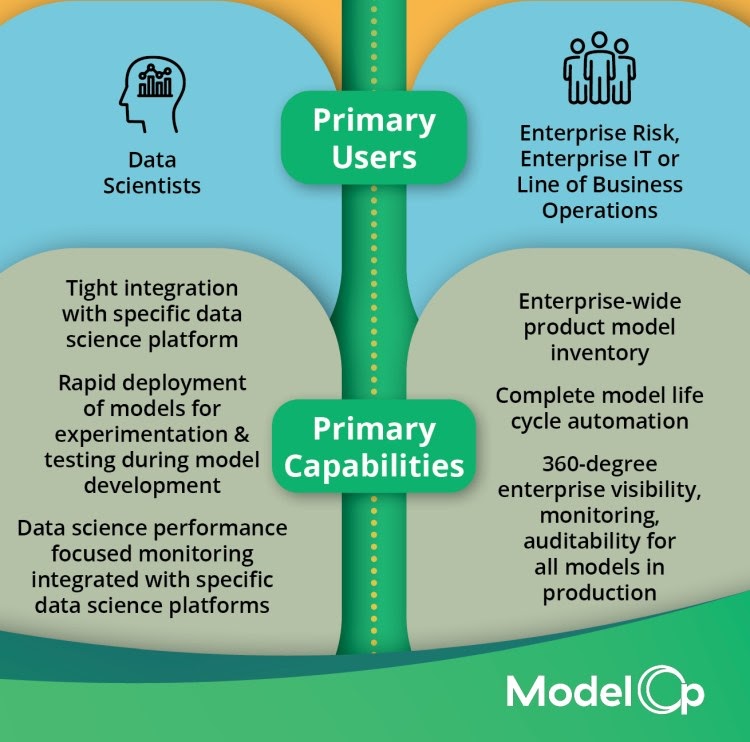

- MLOps is a feature of a data science platform that helps with the rapid experimentation and deployment of ML models during the data science process. Its owners are data scientists within the line of business.

- ModelOps, by contrast, is an enterprise capability that facilitates enterprise operations and governance for models in production. Its owner is the CIO/IT department, and its primary users are Enterprise Risk, Enterprise IT or Line of Business Operations.

The activities performed as part of MLOps are:

- Training and retraining models

- Deploying models and integrating them with data pipelines or ETL workflows

- Integrating ML models into production workflows and systems

- Automating ML model lifecycle management (for example, versioning and releases)

- Monitoring model performance in production and updating models when needed to reflect new information (for example, adding a new input feature)

- Integrating ML results into strategic business processes such as decision-making for marketing campaigns or sales forecasting.
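To make two of these activities more concrete, here is a minimal sketch of versioning and production monitoring in Python. It is an illustration only: the `ModelRegistry` class, its method names and the 0.05 accuracy tolerance are assumptions made for this example, not part of any particular MLOps product.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    """One registered version of a model plus the accuracy seen in production."""
    version: int
    baseline_accuracy: float  # accuracy measured when the version was validated
    live_accuracy: list[float] = field(default_factory=list)


class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist to storage."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, baseline_accuracy: float) -> ModelVersion:
        """Add a new version; numbering starts at 1 and increases by 1."""
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(len(history) + 1, baseline_accuracy)
        history.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        """Return the most recently registered version."""
        return self._versions[name][-1]

    def record_accuracy(self, name: str, accuracy: float) -> None:
        """Store one production accuracy measurement for the latest version."""
        self.latest(name).live_accuracy.append(accuracy)

    def needs_retraining(self, name: str, tolerance: float = 0.05) -> bool:
        """True when mean live accuracy is more than `tolerance` below baseline."""
        current = self.latest(name)
        if not current.live_accuracy:
            return False
        mean_live = sum(current.live_accuracy) / len(current.live_accuracy)
        return current.baseline_accuracy - mean_live > tolerance
```

A team would call `register` on each release, feed `record_accuracy` from production monitoring, and use `needs_retraining` as one possible trigger for the retraining step.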

ModelOps tools, on the other hand, enable operationalisation of all AI models, at the same time allowing collaboration amongst various teams and stakeholders involved in building AI-enabled applications (data science teams, machine learning engineers, software developers). Moreover, ModelOps tools supply business leaders with dashboards, reporting and information, which helps to create transparency and autonomy among teams and enable them to work collaboratively with AI at scale.

Value and benefits

With ModelOps in place, all information is governed, tracked and auditable. This not only provides transparency into AI across the enterprise but is also essential for monitoring model performance, detecting drift, retraining AI models and gaining insight into AI health.
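Drift detection can take many forms. As one illustration, the sketch below computes the Population Stability Index (PSI), a commonly used drift metric, between a reference sample and a recent sample of a single numeric feature. The choice of PSI, the function name and the ten equal-width bins are assumptions made for this example; the sources quoted here do not prescribe a specific method.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p_exp, p_act = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))
```

Identical samples give a PSI of zero, and the value grows as the two distributions diverge. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, though any threshold should be tuned to the model at hand.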

Teams can better manage and plan for infrastructure costs, while also maintaining control over access to sensitive business data through governance and role-based access control. By automating the logging and tracking of this information, data science teams, machine learning engineers, and software development teams can focus on building and maintaining systems, while business and IT leaders can easily access reporting metrics for ongoing monitoring.
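As a rough sketch of how role-based access control and automated logging fit together, the Python snippet below checks an action against a role and records every decision in an audit trail. The role names, permission names and the `authorize` function are hypothetical and chosen only for illustration; a real platform would load its policy from configuration and write to durable storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, hard-coded for the example.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_metrics", "train_model"},
    "ml_engineer": {"read_metrics", "deploy_model"},
    "business_lead": {"read_metrics"},
}

# In-memory audit trail; each entry records one access decision.
audit_log: list[dict] = []


def authorize(user: str, role: str, action: str) -> bool:
    """Check the action against the role and log the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```

Because denied requests are logged as well as granted ones, the same record can serve both access control and later auditing.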

Experts also state that ModelOps will be one key to unlocking value with AI for the enterprise. In a way, ModelOps acts as the connective tissue between all the other parts of the AI pipeline – data management, data wrangling, model training, model deployment and management, and business applications.

In practice, ModelOps is like a bridge between data scientists, data engineers, application owners, and infrastructure owners. It fosters dynamic collaboration and improved productivity.

Objections against ModelOps

However, the data community is divided over whether ModelOps exists as a distinct discipline and whether it is relevant for streamlining the operationalisation of AI and ML models. Some data professionals are adamant that ModelOps is nothing more than a buzzword that doesn’t contribute in any meaningful way and is basically just MLOps marketed under a new term. “Why would not MLops be able to ‘govern and manage production models’?” asks Anders Arpteg, Director of Data Science at the Swedish Security Service.

All the “Ops” basically boil down to making different disciplines work together in the same teams, sharing the same goals, states Lars Albertsson, Founder of Scling.

- DevOps = Developers + Quality Assurance (QA) + Operations

- DataOps = Data Engineering + Developers + QA + Operations

- MLOps = Data Science + Data Engineering + Developers + QA + Operations

ModelOps does not add a new combination, adds Lars.

Olof Granberg, Director of Data and Advanced Analytics technology at ICA Gruppen, questions the need for the level of enterprise governance when most of the industry is going towards decentralised teams. “Maybe the centralised governance is then how to document and monitor models but not actually monitor them centrally,” Olof adds.

Moreover, MLOps capabilities can be supplied centrally where a number of data scientists are the primary stakeholders, just like how DevOps stacks are supplied by central teams. Handover of content/code/functionality is rarely efficient, Olof states.

Conclusion

ModelOps is evidently positioning itself as the solution for organisations that are scaling up their Enterprise AI initiatives. At the same time, it promises to help significantly decrease the model debt incurred and to automate the process of deploying AI solutions and updating models.

ModelOps platforms also tackle some of the most pressing challenges in AI adoption – transparency and explainability. By providing information and insights tailored to business leaders in a way the business can relate to, ModelOps promotes trust, which leads to increased AI adoption.

Meanwhile, we also see highly charged objections from prominent data professionals in the field, directed at the need for, usefulness of and value of ModelOps.

In the end, ModelOps makes some ambitious promises, and over the next few years we’ll see whether it lives up to them and truly brings value for scaling AI initiatives.
