Building a predictive maintenance programme starts with implementing sensors and IoT, but the ultimate goal is to deliver value.

Tetra Pak, the company that revolutionised food packaging with the tetrahedron-shaped plastic-coated paper carton, has been on a predictive maintenance journey for several years now. Noah Schellenberg, Data Science Manager at Tetra Pak, walked us through the steps taken and lessons learned in building the programme at the Maintenance Analytics Summit 2019.

Predictive maintenance before there was data science

Noah took us back to the very roots of Tetra Pak’s predictive maintenance programme, which date back more than ten years. It started as a technology project to fit their equipment with sensors and IoT connectivity, but it was not until 2017, when their Data Science Centre of Excellence (CoE) was established, that they started working with the data.

The CoE’s mission is to advance the prescriptive analytics journey, which is analogous to a customer journey: the task is to understand the needs of the customers (internal and/or external) in order to improve their decision-making using the scientific method.

Today, Tetra Pak offers predictive maintenance as a service, which over time can help reduce both corrective and predetermined downtime.

The Q&PMC (Quality and Performance Management Center) sends daily advice to the field, prescribing an action based on the predictive models. Models must not just predict; they must also prescribe an action that is closely aligned with the business process.

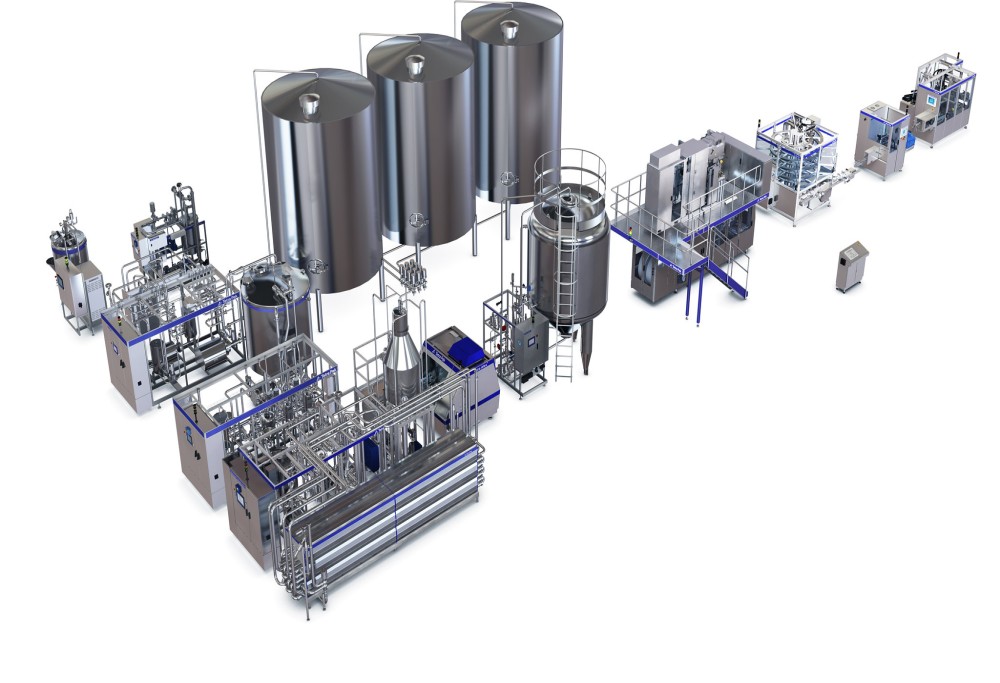

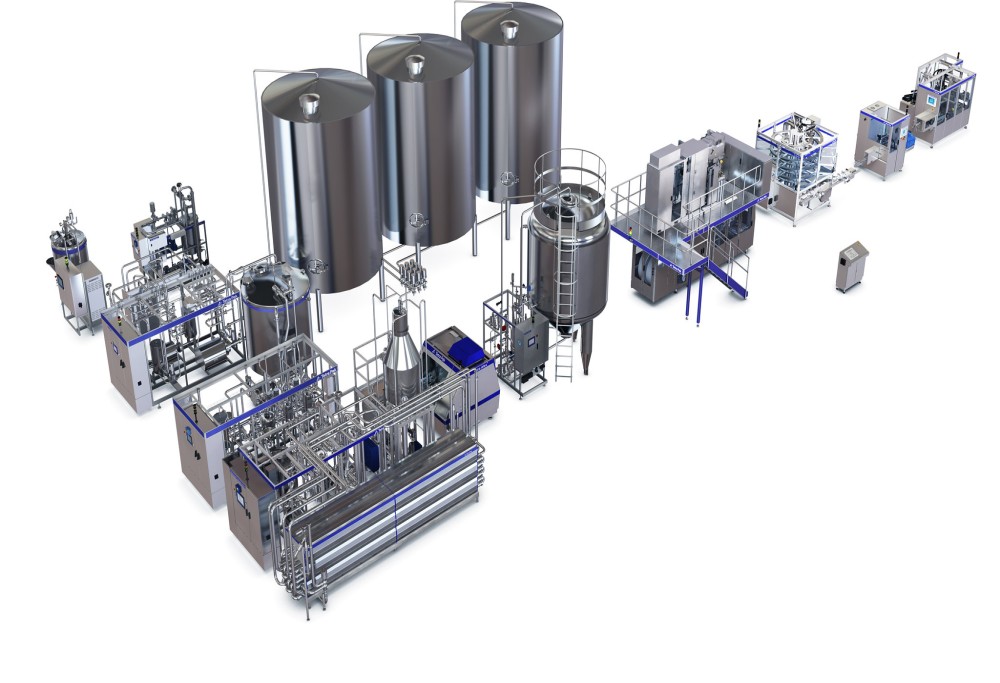

They install sensors on filling machines, which are connected to their cloud solution. Based on the collected data, they provide predictions and monitoring of the machines’ functions.

But, as Noah stresses, it’s a journey: “[Predictive maintenance] is not just a magic button that from one day to the next you are going to see all the value.”

Tetra Pak’s agenda for predictive maintenance

Their agenda consists of three crucial steps: prioritising, evaluating success factors and sustaining the value.

How to prioritise

In predictive maintenance, prioritisation happens through the Proof of Value (PoV) process. Because data science can reach across the entire organisation, and predictive maintenance is only one area where data scientists are involved, priority should be given to the right activities. If projects are not prioritised, data scientists will simply be handed data and expected to work magic, which usually ends in frustration on all sides.

As Noah points out, the idea of failing fast was probably a good concept initially, but he warns companies to be wary of it and not take it at face value.

Tetra Pak follows a three-step process that starts with the PoV:

- Proof of Value

- Deployment

- Service.

Data scientists, however, are not heavily involved in deploying and productionising models, which is more of an IT concern. According to Noah, data scientists want to know the total opportunity before they even start a project, so they can be confident it will deliver the most value.

With hundreds of functions and points of interest in the machines, how do you decide what data scientists should focus on? In Tetra Pak’s case, the first decisions were based on brainstorming and gut feeling, but as their predictive maintenance programme matured, they started focusing on the projects that would deliver the most value and hold the biggest opportunity.

As the statistics show, only 20% of all projects get deployed, so the empirical value (the realised projects that can actually reduce costs) is 20% of the total opportunity.
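The empirical-value arithmetic can be sketched in a few lines of Python. This is a minimal illustration, assuming the 20% deployment rate applies uniformly to every project in the portfolio; the `Opportunity` type, the project names and the saving estimates are hypothetical placeholders, not Tetra Pak figures.

```python
from dataclasses import dataclass

# Deployment rate quoted in the talk; applying it uniformly to every
# project is a simplifying assumption made for this sketch.
DEPLOYMENT_RATE = 0.20


@dataclass
class Opportunity:
    """A candidate predictive maintenance project (hypothetical fields)."""
    name: str
    estimated_saving: float  # cost reduction if the project is deployed


def total_opportunity(opportunities):
    """Sum of the estimated savings of all candidate projects."""
    return sum(o.estimated_saving for o in opportunities)


def empirical_value(opportunities, deployment_rate=DEPLOYMENT_RATE):
    """Expected realised value: total opportunity scaled by the deployment rate."""
    return total_opportunity(opportunities) * deployment_rate


# Hypothetical portfolio; the numbers are placeholders, not real data.
portfolio = [
    Opportunity("hypothetical_project_a", 500_000),
    Opportunity("hypothetical_project_b", 300_000),
    Opportunity("hypothetical_project_c", 200_000),
]
```

With these placeholder figures, `total_opportunity(portfolio)` is 1,000,000 and `empirical_value(portfolio)` comes out at about 200,000.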

One way to prioritise projects is to assess whether they hold high or low business value, and whether the data is actionable or not. In addition, you don’t want to start with highly complex problems; instead, focus on low-complexity problems with high data quality.
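The prioritisation rule above can be expressed as a filter followed by a sort. This is a sketch under stated assumptions: the 0..1 ratings, the 0.5 cut-off and the `complexity - data_quality` ordering are hypothetical choices for illustration, not Tetra Pak’s actual scoring.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A candidate project rated by the team (all ratings hypothetical, 0..1)."""
    name: str
    business_value: float  # higher means more business value
    actionable: bool       # can the data drive a concrete action in the field?
    complexity: float      # higher means a harder problem
    data_quality: float    # higher means cleaner, more complete data


def quadrant(c: Candidate, cutoff: float = 0.5) -> str:
    """Place a candidate in the business value x actionability matrix."""
    value = "high value" if c.business_value >= cutoff else "low value"
    action = "actionable" if c.actionable else "not actionable"
    return f"{value} / {action}"


def rank(candidates, cutoff: float = 0.5):
    """Shortlist high-value, actionable candidates, simplest and cleanest first."""
    shortlist = [
        c for c in candidates if c.actionable and c.business_value >= cutoff
    ]
    # Low complexity and high data quality sort first; ties go to higher value.
    return sorted(
        shortlist, key=lambda c: (c.complexity - c.data_quality, -c.business_value)
    )


candidates = [
    Candidate("A", 0.9, True, 0.2, 0.8),
    Candidate("B", 0.8, True, 0.7, 0.4),
    Candidate("C", 0.95, False, 0.1, 0.9),
    Candidate("D", 0.3, True, 0.1, 0.9),
]
```

Here `rank(candidates)` keeps A and B, with A first because it is simpler and has cleaner data; C is dropped as not actionable and D as low value, even though both look easy.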

What is needed for success

It goes without saying that three things must be in place; without them, there is no predictive maintenance:

- Data acquisition strategy

- Quality & governance

- Access tools.

But Noah highlights that it’s also crucial to adopt a prescriptive mindset in data science. This doesn’t mean a predictive model that tells you when to do your maintenance; it means specific actions that operations must take when a given function reaches a threshold flagged by the system.
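A prescriptive rule of this kind can be sketched as a lookup from a model score to an action. This is a minimal illustration, assuming each machine function gets a score between 0 and 1 from a predictive model; the function names, thresholds and actions are hypothetical and would in practice come from subject matter experts and the business process.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: at or above `threshold`, operations perform `action`."""
    function: str
    threshold: float
    action: str


# Illustrative rules only; not real Tetra Pak functions or procedures.
RULES = [
    Rule("function_a", 0.8, "Replace component at next planned stop"),
    Rule("function_a", 0.5, "Inspect at next planned stop"),
    Rule("function_b", 0.7, "Schedule cleaning"),
]


def prescribe(function: str, score: float, rules=RULES) -> Optional[str]:
    """Return the action of the strictest rule the score reaches, or None."""
    matching = [r for r in rules if r.function == function and score >= r.threshold]
    if not matching:
        return None  # below every threshold: no advice is sent
    return max(matching, key=lambda r: r.threshold).action
```

For example, a score of 0.85 on `function_a` yields the replacement action, 0.6 yields an inspection, and 0.3 on `function_b` produces no advice. Picking the strictest matching rule keeps the output to a single, unambiguous action per function.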

Another success factor is collaboration between data scientists, the business and data engineers. “If you want to get the most out of data scientists, don’t let them do data engineer work,” advises Noah. The line between data scientists and data engineers is sometimes blurred, but if data scientists are busy with data engineering, they can’t do what they were hired to do.

DevOps people should also be brought in when you reach the deployment stage. Once you pass the PoV and have an empirical value, IT’s deployment work might take months. DevOps can take over the deployment process and iterate together with the business, while data scientists move on to servicing and continue providing value.

Noah names “thick” data and failure data as additional success factors. Thick data refers to a small number of data points that are rich in context and that eventually grow into big data as they are developed. Data scientists should therefore work closely with subject matter experts to get the most out of the data, even if there are only a few data points. As Noah puts it, he doesn’t believe the statement “there’s not enough data”. Tetra Pak applied the thick data principle in its Maintenance Visibility project, which focused on identifying equipment breakdowns.

How to sustain the value

Noah emphasises that in the Proof of Value phase there’s heightened excitement about the algorithm, accompanied by the risk of overfitting and the pressure to succeed. But as projects move into the service stage and are no longer a priority for data scientists, the models can slowly deteriorate. This is why Noah advises that, in the service stage, models should be monitored constantly, with their performance tracked in real time. This doesn’t have to be full-time work for a data scientist, but the business needs to feel that it has the necessary data science support.
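Lightweight monitoring of this kind can be sketched as a rolling hit rate compared against the PoV baseline. This is a minimal sketch, assuming the field reports whether each advice turned out to be correct; the `PerformanceMonitor` class, the window size and the tolerance are hypothetical choices, not Tetra Pak’s setup.

```python
from collections import deque
from typing import Optional


class PerformanceMonitor:
    """Track a model's hit rate over its most recent advices and flag decay.

    Hypothetical sketch: `baseline` is the precision measured during the PoV,
    and each recorded outcome is True if the field confirmed the advice.
    """

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        # A bounded deque keeps only the latest `window` outcomes.
        self.outcomes = deque(maxlen=window)

    def record(self, advice_was_correct: bool) -> None:
        self.outcomes.append(advice_was_correct)

    def precision(self) -> Optional[float]:
        """Share of recent advices confirmed as correct, or None if none yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self) -> bool:
        """True when rolling precision falls more than `tolerance` below baseline."""
        p = self.precision()
        return p is not None and p < self.baseline - self.tolerance
```

With a baseline of 0.8 and a window of 10, six confirmed and four unconfirmed advices give a rolling precision of 0.6, which triggers the degradation flag; a data scientist only needs to step in when the flag is raised, rather than watching the model full-time.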

The true key to sustaining the value of a model lies in reinforcement learning, and no, it doesn’t refer to the deep learning technique of that name. Instead, it means constant monitoring and engagement with the business, with data science taking ownership of the models and the business taking ownership of acting on them, keeping humans in the loop.

“Data science is a team sport, and it will help improve business decisions,” concludes Noah.
