Shell, a global company in the energy industry, is on a journey to create an adaptive monitoring system powered entirely by artificial intelligence (AI). In March, the company announced a significant milestone: scaling AI Predictive Maintenance to monitor and maintain more than 10,000 pieces of equipment in upstream, manufacturing, and integrated gas assets across its global asset base. This is one of the largest deployments in the energy industry today.

“We talk about digitalization many times, but for me, digitalization is a transformational opportunity for the industry and Shell. Digitalization is not new. We capture data from sensors, monitoring thousands of pieces of equipment. What we try today is to analyze data more cleverly, make sense of real-time data in real-time and try to understand how we can, based on the data insights, create value for the business,” says Dr Maria Papastathi, Head of Operations Digital Transformation at Shell.

Pursuing any vision brings many challenges, and Shell’s vision is no exception. The digital transformation team is trying to give end users in real-time operations trusted, real-time data so that they can compute their models, analyze the data, find insights in the operations as quickly as possible, and do all of this consistently.

“What we describe here is what we call data fabric. Our model and data platform for the monitoring data foundation is based on the data fabric as a concept,” adds Jacques Vosloo, Senior Monitoring Domain Expert at Shell.

In this article, you will have a chance to learn about Shell’s journey with developing and implementing a Monitoring Data Foundation (MDF), the challenges and benefits, and the next steps in the digital transformation process.

Modern Technology Supporting Data Fabric

Data fabric, as an architecture, facilitates the end-to-end integration of various data sources and cloud environments through an intelligent and automated system that standardizes data management practices and practicalities across the cloud, on-premises and edge devices.

According to Papastathi and Vosloo, Shell initially found the concept of data fabric hard to grasp. Once the company started to research it, however, it gained a deeper understanding of what data fabric is and what it will accomplish in the future.

From a digitalization and operations perspective, Shell’s strategy centers on what the company calls the Asset of the Future: an asset that is fully digital, agile, and optimized, enabling flawless real-time operation. Such an autonomous plant is valuable for several reasons:

- Limiting the company’s emissions

- Achieving goals from a safety perspective

- Increasing productivity and adding value to the business

The autonomous plant refers to a state where things are done automatically and AI, rather than people, does most of the work. The core data concept for reaching this vision is data fabric, and eventually a Data Mesh, underpinned by DIY capabilities (data discovery, integration and delivery).

Proactive Monitoring is a highly connected work process with various input and output work processes in Shell’s Asset Management System. Establishing a data fabric for the inputs and outputs of proactive monitoring execution makes them immediately consumable in enterprise data warehouses and digital twins, because the fabric of data is shared.

Asset monitoring is the early detection of threats or opportunities through structured monitoring of processes and equipment, enabling the Asset Support Team to “find small, fix small”, leading to sustained optimal operations while minimizing process safety and reliability risks.

The company built the Monitoring Data Foundation to integrate the technologies used to execute proactive monitoring. It quickly realized, however, that the foundation could be used much more widely, magnifying the business impact of data fabric across the entirety of real-time operations.

“The role of Monitoring Data Foundation is to establish lineage with master metadata and activating it to bespoke views that all the different monitoring technologies can make use of that aids rapid deployment of visualization and analytics. And it goes between data providers (at the bottom) and the data consumers (at the top). At the top, you’ve got structured tools that different personas are using, you’ve got discipline engineers looking at specialized monitoring tools for equipment, you’ve data scientists to use datasets to predict things that are going to happen, you’ve got people that want to investigate a particular event (monitoring insight from analytics) that we’ve detected and could navigate through the data. That’s why we have data fabric as a concept,” explains Vosloo.

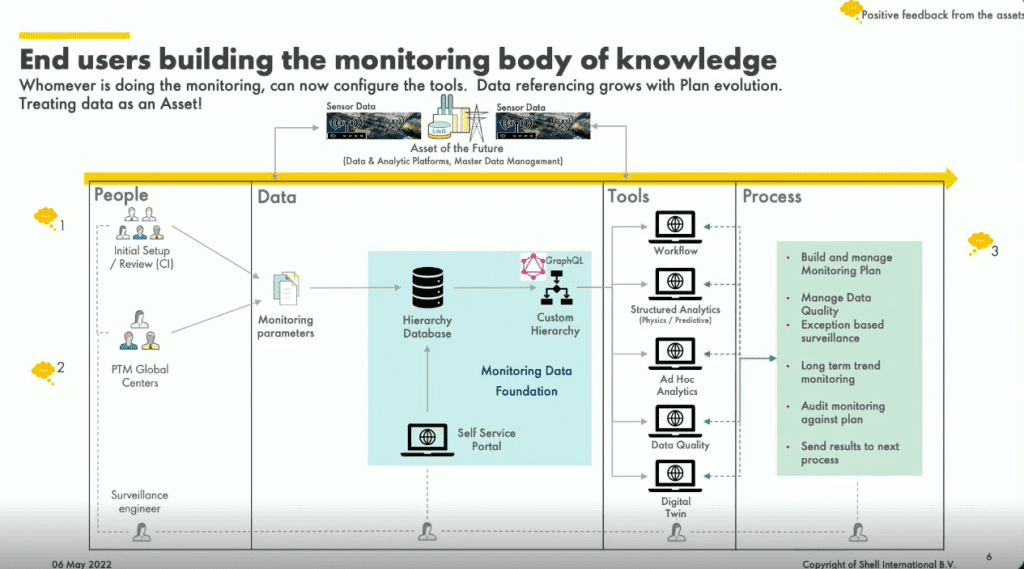

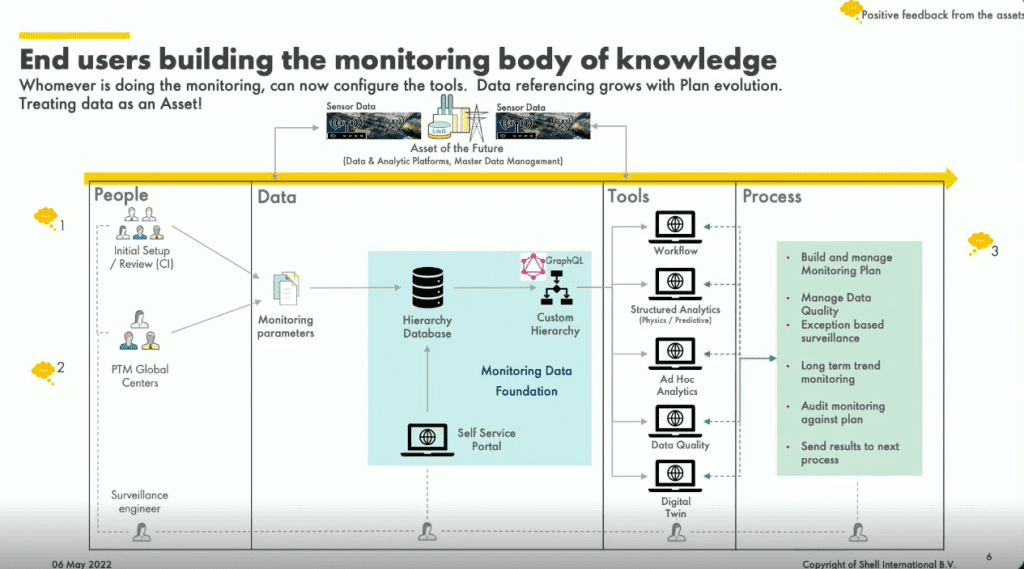

End Users Building the Monitoring Body of Knowledge

Four key factors play an important role in the monitoring of the Asset:

- People

- Process

- Data

- IT Tools

The company starts monitoring by creating a technical monitoring plan: a set of activities, processes and equipment to be monitored. The plan also identifies the real-time data lake that supports that monitoring. Execution takes place across the analytics continuum, using descriptive, diagnostic and predictive analytics. After events are detected, they are vetted and investigated, with vendors helping the team analyze the problem, and maintenance work orders are created where remediation is needed in the field.

Shell is trying to integrate the data coming out of its monitoring technologies with data from the rest of the enterprise. One way this can help is in achieving a truly agile condition-based maintenance strategy. To do this, the company ingests data from work processes, master data systems, and requirements from the technologies or tools. The fourth factor in the monitoring process is the people who do the monitoring and the planning.
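The detect, vet and work-order sequence described above can be illustrated with a minimal Python sketch. Everything in it is a hypothetical assumption made for illustration, not Shell's implementation: the tag names, the fixed alarm limits, the 5% vetting margin, and the `Reading`, `Event` and `WorkOrder` types.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Reading:
    tag: str      # hypothetical sensor tag, e.g. "PUMP-101.vibration"
    value: float


@dataclass
class Event:
    tag: str
    value: float
    limit: float
    vetted: bool = False


@dataclass
class WorkOrder:
    tag: str
    description: str


def detect_events(readings: List[Reading], limits: Dict[str, float]) -> List[Event]:
    """Detection step: flag readings above their configured limit."""
    events = []
    for reading in readings:
        limit = limits.get(reading.tag)
        if limit is not None and reading.value > limit:
            events.append(Event(reading.tag, reading.value, limit))
    return events


def vet(event: Event, min_excess: float = 0.05) -> Event:
    """Vetting step (assumed rule): keep events that exceed a positive
    limit by more than min_excess, expressed as a fraction of the limit."""
    event.vetted = (event.value - event.limit) / event.limit > min_excess
    return event


def raise_work_orders(events: List[Event]) -> List[WorkOrder]:
    """Create a work order for every vetted event."""
    return [
        WorkOrder(e.tag, f"Investigate {e.tag}: {e.value} exceeds limit {e.limit}")
        for e in events
        if e.vetted
    ]
```

In practice the vetting step would involve engineers and vendors rather than a fixed margin; the sketch only shows how events might flow from detection to a work order.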

“End users of the data don’t want to go and look for identifiers in a data lake. They want to be able to quickly find what they are looking for in a structure that they recognize, that integrates well with legacy and modern technologies, and that is why we created the Monitoring Data Foundation,” explains Vosloo.

What Has Shell Done So Far with the Monitoring Data Foundation?

So far, the company has built a self-service model and brought all monitoring technologies together. Instead of re-inventing and rediscovering data each time, it does the work once and reuses the result. All the instrumented data used in the monitoring process is catalogued within the Monitoring Data Foundation, which is the right place to run data quality rules and where the data quality assurance team remediates data issues. In summary, some of the achievements Shell has gained with the Monitoring Data Foundation are:

- Flexible interfaces that can handle multiple hierarchies (asset structure mapping) from different sources and transform them into a single output that users can compute models on

- A data foundation integration layer that shares technology-driven insights with data platforms such as digital twin and performance reporting platforms

- Data quality and integrity for all real-time data streams catalogued in the Monitoring Data Foundation
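As a rough illustration of mapping several hierarchies into a single output and running a data quality rule over the resulting catalogue, consider the Python sketch below. The two source formats (a slash-separated maintenance path and a dot-separated historian tag), the sample identifiers, and the rule "every piece of equipment must have at least one real-time tag" are all assumptions chosen for the example; the article does not describe the actual formats or rules used in the Monitoring Data Foundation.

```python
from typing import Dict, List, Tuple

# Catalogue key: (site, unit, equipment). Hypothetical structure.
AssetKey = Tuple[str, str, str]


def parse_maintenance_path(path: str) -> AssetKey:
    """Assumed maintenance-system format: 'SITE/UNIT/EQUIPMENT'."""
    site, unit, equipment = path.split("/")
    return (site, unit, equipment)


def parse_historian_tag(tag: str) -> AssetKey:
    """Assumed historian format: 'SITE.UNIT.EQUIPMENT.MEASUREMENT'."""
    site, unit, equipment, _measurement = tag.split(".")
    return (site, unit, equipment)


def build_catalogue(
    maintenance_paths: List[str], historian_tags: List[str]
) -> Dict[AssetKey, List[str]]:
    """Merge both hierarchies into one mapping: asset -> real-time tags."""
    catalogue: Dict[AssetKey, List[str]] = {}
    for path in maintenance_paths:
        catalogue.setdefault(parse_maintenance_path(path), [])
    for tag in historian_tags:
        catalogue.setdefault(parse_historian_tag(tag), []).append(tag)
    return catalogue


def equipment_without_tags(catalogue: Dict[AssetKey, List[str]]) -> List[AssetKey]:
    """Example data quality rule: list assets with no real-time tags."""
    return sorted(key for key, tags in catalogue.items() if not tags)
```

Keying the catalogue on the full (site, unit, equipment) tuple is a design choice of this sketch: it avoids collisions when two sites reuse the same equipment name.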

Apart from replicating technology quickly, Shell has been able to rationalize legacy technologies, and data usage has increased as a result of the Monitoring Data Foundation. Other benefits that Shell gained with the MDF are:

- The successful release of the Monitoring Data Foundation has helped simplify proactive monitoring and directly enabled the decommissioning of legacy IT tools, resulting in a cost savings of MMUSD per year.

- Data consumption increased from Gigabytes to Terabytes, but the cost of processing the data has decreased.

- All of this was achieved while maintaining uninterrupted IT/OT operations.

“The message here is that if you work smart with your data, you find opportunities to rationalize technology, position IT Tools more clearly with process driven capabilities, limit the functionality overlap, and ultimately for your technology customers, it is clear what different technologies are meant to do,” adds Vosloo.

The challenges that Shell faced during the change journey are:

- Resistance to modernizing/rationalizing legacy technology

- Creating an understanding of data quality/ownership

- Understanding market disruption technology and its application

- Adoption of market technology is slow and, at times, cost-prohibitive

“Adoption challenges are coming over and over again, where these challenges have nothing to do with technology; it is about people, how they behave, the change they have to go through, and finally realizing what is in it for them. And unless you go through that process of change and help people to realize that by answering questions, you will not sustain whatever has been implemented. You can implement it for a while, but the real value will never be materialized. What we took from this program is to work on the business case and work with people to understand their needs; focus on very small areas to improve, then try to scale it up. If we go the way around (central development to Assets’ implementation), it is usually a failure,” said Papastathi.

What’s Next for Shell on the Way to Digital Transformation?

Many teams are involved in Shell’s journey to create an adaptive monitoring system powered entirely by artificial intelligence. However, one of the stepping-stones to get there is the implementation of Data Virtualization, which will bring the company’s data fabric full circle and allow for automatic data discovery by humans and machines.

“It’s about creating an autonomous system where machines and humans can discover data. We believe that data or knowledge graphing technology plays a role. We believe having an environment where people can play with datasets, and the whole data mesh concept will play an important role in innovation in this space. Not just a few engineers and scientists to find the data to build compute models, but help the whole company contribute to it, even contractors in a particular knowledge area. That will help us in the next level of the analytics continuum, prescriptive analytics. When it is not up to a human to decide what is next but based on history and input work processes and data from the field, recommendations can be made to the engineers who can take action from there,” said Vosloo.

Another stop on the journey is the seamless integration of emerging technologies in the conventional and renewable energy business and the acceleration of the Asset of the Future program by using consistent quality data for consuming applications.

“We just scratched the surface here. We haven’t even looked after the data silos for proactive technical monitoring. Imagine what we will be capable of doing by bringing data from all of these work processes together to drive new, more advancing insights. That’s where we would like to go next. There are more players inside Shell that will be part of the journey,” added Vosloo.

Maria Papastathi and Jacques Vosloo spoke at this edition of the Data Innovation Summit. You can find their presentation here.
